This article was originally published on ContainerJournal. We are re-publishing it here.

Adoption of Kubernetes in data centers and the cloud has been remarkable since its release in 2014. As an orchestrator of lightweight application containers, Kubernetes has grown to handle and schedule diverse IT workloads, from virtualized network functions to AI/ML jobs that rely on GPU hardware resources.

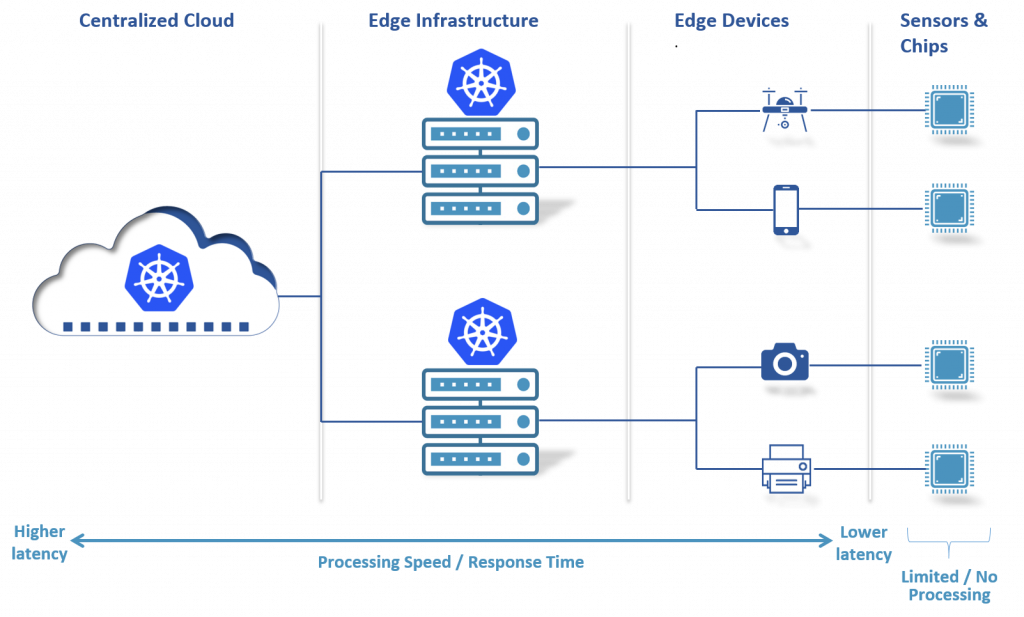

Kubernetes’ core capabilities have improved significantly as IT workloads have become more diverse and new technologies have been introduced. Kubernetes is now being adopted for edge infrastructure, which has fewer resources than a data center and keeps a persistent connection to the central cloud for processing the data generated by different IoT devices.

Kubernetes has become a de facto standard for enterprises scaling up their IT infrastructure to achieve cloud-native capabilities.

Download our ebook, A Deep-Dive On Kubernetes For Edge, which covers current adoption scenarios for Kubernetes in edge use cases, the latest Kubernetes and edge case studies, deployment approaches, commercial solutions and efforts by open communities.

However, there are challenges in managing resources and workloads in edge-based infrastructure, as there are thousands of edge and far-edge nodes to manage. Greater centralized control from the cloud, consistent security policies and low latency are basic features expected of edge infrastructure deployed by both enterprises and telecom service providers. Let us look at why and how Kubernetes can help overcome these challenges.

Why Kubernetes for Edge?

Edge nodes add another layer to the IT infrastructure that enterprises and service providers run alongside their on-premises and cloud data centers. It is therefore imperative for admins to manage workloads at the edge in the same dynamic and automated way as on-premises or in the cloud.

Additionally, the whole architecture includes different types of computing hardware resources and software applications. Kubernetes is a good fit because it is infrastructure-agnostic and can manage a diverse set of workloads from different compute resources seamlessly.

For such edge-based environments, Kubernetes can be useful for orchestrating and scheduling resources from cloud to edge data center workloads. Also, Kubernetes can be helpful in managing and deploying edge devices along with cloud configurations.

In a typical edge and IoT-based architecture, analytics and control plane services reside in the cloud. Because operations and data flow from the cloud to edge devices and back, there is a need for a common operational paradigm for automated processing and execution of instructions. Kubernetes provides this common paradigm for all network deployments, so policies and rulesets can be applied to the overall infrastructure. Policies can also be narrowed down to specific channels or edge nodes based on particular configuration requirements. Kubernetes provides horizontal scaling for infrastructure and application development; enables high availability and a common platform for rapid innovation from cloud to edge; and, more importantly, prepares edge nodes for low-latency application access from different IoT devices.
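
The idea of applying a policy everywhere and then narrowing it to particular edge nodes can be illustrated with label matching, which is how Kubernetes objects are commonly grouped. The following is a minimal sketch in plain Python, not Kubernetes API code: the `Node` and `Policy` classes, the label keys (`tier`, `site`) and the policy names are all hypothetical, and the rule that an empty selector matches every node is an assumption of this sketch.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class Node:
    name: str
    labels: Dict[str, str] = field(default_factory=dict)


@dataclass
class Policy:
    name: str
    # In this sketch, an empty selector matches every node.
    match_labels: Dict[str, str] = field(default_factory=dict)


def selects(policy: Policy, node: Node) -> bool:
    """True when every key/value pair in the selector is present on the node."""
    return all(node.labels.get(k) == v for k, v in policy.match_labels.items())


def policies_for(node: Node, policies: List[Policy]) -> List[str]:
    """Names of all policies whose selector matches the node, in input order."""
    return [p.name for p in policies if selects(p, node)]


# Hypothetical fleet: one cloud node and two edge sites.
nodes = [
    Node("cloud-1", {"tier": "cloud"}),
    Node("edge-paris-1", {"tier": "edge", "site": "paris"}),
    Node("edge-oslo-1", {"tier": "edge", "site": "oslo"}),
]
policies = [
    Policy("baseline-security"),                                      # whole infrastructure
    Policy("edge-low-latency", {"tier": "edge"}),                     # all edge nodes
    Policy("paris-data-residency", {"tier": "edge", "site": "paris"}),  # one site
]

for node in nodes:
    print(node.name, policies_for(node, policies))
```

Here the baseline policy lands on every node, while the more specific selectors reach only the edge tier or a single site, which is the narrowing the paragraph above describes.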

Another critical requirement for an edge environment is high availability of the services deployed there, which is a consideration when deciding whether to use Kubernetes for edge orchestration. Kubernetes comes with monitoring and tracking through its APIs and the interconnection among all cluster nodes. Moreover, containers can be replaced quickly and are highly resilient.

How Can Kubernetes Be Used in Edge Architecture?

In March 2019, a Kubernetes IoT Edge Working Group was formed, made up of engineers from Red Hat, VMware, Futurewei, Google, Edgeworx and others. The group was formed as a community to discuss, design and document efforts to use Kubernetes for edge and IoT use cases and to solve the challenges around them.

According to their presentation at KubeCon Europe 2019, there are three ways in which Kubernetes can be used in edge-based architecture to handle workloads and resource deployments.

Let’s discuss all three approaches.

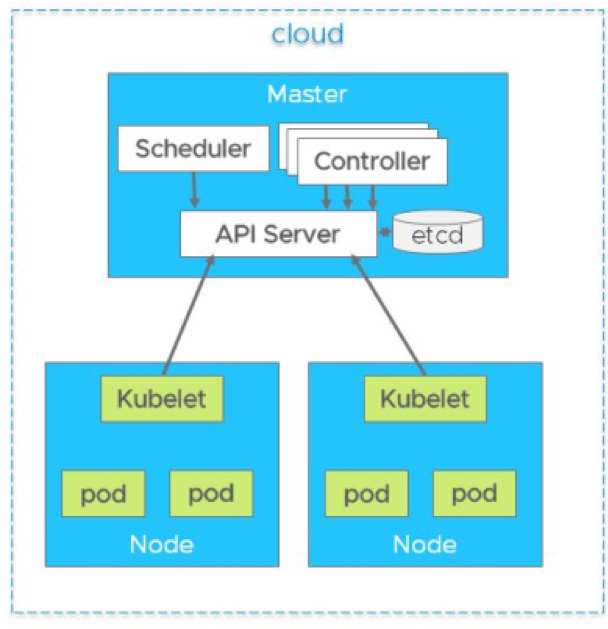

The basic Kubernetes architecture is something like this:

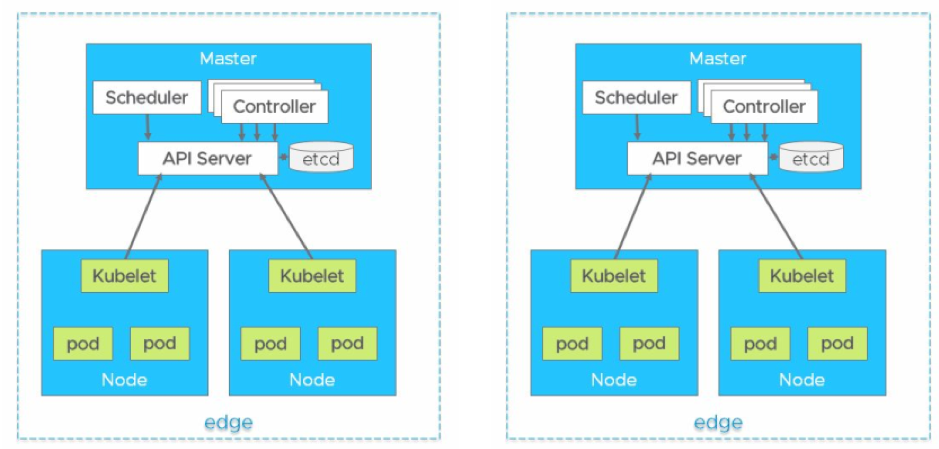

A Kubernetes cluster consists of a master and nodes. The master is responsible for exposing the API to developers and for scheduling deployments across the cluster, including its nodes. Each node contains a container runtime environment, such as Docker or rkt; an element called the kubelet, which communicates with the master; and pods, which are collections of one or more containers. A node can be a virtual machine in the cloud.
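
To make the roles concrete, the relationship between master, nodes and pods can be sketched as a toy model. This is an illustration only, not the real Kubernetes scheduler: the class names, the single `cpu_millis` resource and the "pick the node with the most free CPU" rule are all assumptions chosen to keep the example short.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class Pod:
    name: str
    containers: List[str]   # a pod groups one or more containers
    cpu_millis: int         # hypothetical CPU request for the whole pod


@dataclass
class Node:
    name: str
    cpu_millis: int         # total CPU capacity of the node
    pods: List[Pod] = field(default_factory=list)

    def free_cpu(self) -> int:
        return self.cpu_millis - sum(p.cpu_millis for p in self.pods)


class Master:
    """Toy stand-in for the master: accepts pods and picks a node for each."""

    def __init__(self, nodes: List[Node]) -> None:
        self.nodes = nodes

    def schedule(self, pod: Pod) -> Optional[str]:
        # Keep only nodes with enough free CPU, then pick the least loaded one.
        candidates = [n for n in self.nodes if n.free_cpu() >= pod.cpu_millis]
        if not candidates:
            return None  # no node fits; the pod would stay pending
        target = max(candidates, key=Node.free_cpu)
        target.pods.append(pod)
        return target.name


# Two hypothetical VM-backed nodes and four identical pods.
master = Master([Node("vm-a", 2000), Node("vm-b", 1000)])
placements = [master.schedule(Pod(f"web-{i}", ["nginx"], 800)) for i in range(4)]
print(placements)  # ['vm-a', 'vm-a', 'vm-b', None]
```

The last pod is not placed because neither node has 800 millicores left, which hints at why capacity-constrained edge nodes need careful workload management.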

For edge-based scenarios, the three approaches are as follows.

- The first approach is whole clusters at the edge. In this approach, the whole Kubernetes cluster is deployed within edge nodes. This option is ideal when the edge node has limited capacity or is a single-server machine, and extra resources should not be spent on separate control planes and nodes. K3s is the reference architecture suited to this type of solution. K3s is wrapped in a simple package that reduces the dependencies and steps needed to run a production Kubernetes cluster, making the clusters lightweight and better able to run on edge nodes.

Figure 3: Rancher K3s as a reference

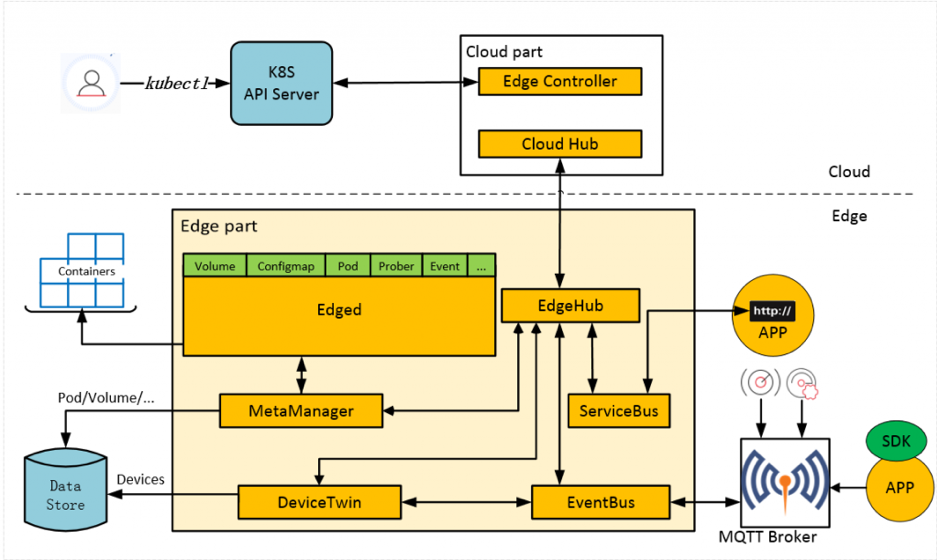

- The second approach to using Kubernetes for edge is taken from KubeEdge, which is based on Huawei’s IoT Edge platform, Intelligent Edge Fabric (IEF). In this approach, the control plane resides in the cloud (either a public cloud or a private data center) and manages the edge nodes that run the containers and resources. This architecture supports different hardware resources at the edge and enables better edge resource utilization, which significantly reduces setup and operational costs for edge cloud deployment.

Figure 4: KubeEdge as a reference

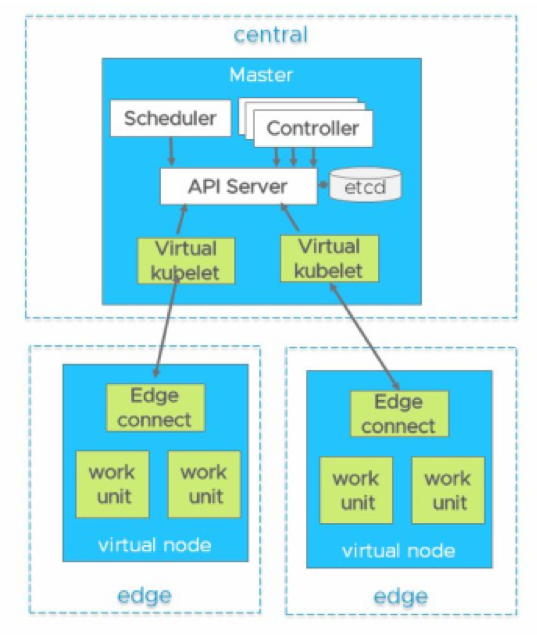

- The third option is hierarchical cloud plus edge, in which a virtual kubelet is used as the reference architecture. Virtual kubelets reside in the cloud and hold an abstraction of the nodes and pods deployed at the edge. Through this abstraction, virtual kubelets get supervisory control over the edge nodes that run the containers. Using virtual kubelets enables flexibility in resource consumption for edge-based architecture.
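
The third approach, where an object in the cloud stands in for resources at the edge, can be sketched as a simple proxy. This is a hedged illustration rather than the Virtual Kubelet project's actual provider interface: `EdgeBackend`, `InMemoryEdgeSite`, `VirtualNode`, their methods and the image names are hypothetical, and the in-memory backend exists only to make the sketch runnable.

```python
from typing import Dict, List, Protocol


class EdgeBackend(Protocol):
    """Hypothetical interface to whatever actually runs containers at the edge."""

    def start(self, pod_name: str, image: str) -> None: ...
    def stop(self, pod_name: str) -> None: ...
    def running(self) -> List[str]: ...


class InMemoryEdgeSite:
    """Stand-in edge site used only to make the sketch runnable."""

    def __init__(self) -> None:
        self._pods: Dict[str, str] = {}

    def start(self, pod_name: str, image: str) -> None:
        self._pods[pod_name] = image

    def stop(self, pod_name: str) -> None:
        self._pods.pop(pod_name, None)

    def running(self) -> List[str]:
        return sorted(self._pods)


class VirtualNode:
    """Lives in the cloud; presents an edge site to the control plane as one node."""

    def __init__(self, name: str, backend: EdgeBackend) -> None:
        self.name = name
        self._backend = backend

    def create_pod(self, pod_name: str, image: str) -> None:
        self._backend.start(pod_name, image)  # forwarded to the edge site

    def delete_pod(self, pod_name: str) -> None:
        self._backend.stop(pod_name)

    def status(self) -> Dict[str, object]:
        # The cloud side only sees this summary, not the edge hardware itself.
        return {"node": self.name, "pods": self._backend.running()}


edge = VirtualNode("edge-site-1", InMemoryEdgeSite())
edge.create_pod("sensor-gateway", "example/gateway:1.0")
edge.create_pod("video-analytics", "example/analytics:2.3")
edge.delete_pod("sensor-gateway")
print(edge.status())  # {'node': 'edge-site-1', 'pods': ['video-analytics']}
```

Keeping the backend behind a small interface is what gives this pattern its flexibility: the cloud-side code stays the same whether the edge site is a single device or a full cluster.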

There are infrastructure, control plane and data plane challenges in using Kubernetes for edge and IoT use cases. These include how to manage resources and workloads at the edge, as well as how edge sites communicate with the cloud and with each other.

Summary

Kubernetes adds many enhancements and feature sets for edge-based network infrastructure:

- Streamlines workloads and resource management using policy-based scheduling.

- Adds security and networking features.

- Enables auto-scaling and traffic shaping for better resource utilization and workload prioritization.
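
As a concrete instance of the auto-scaling point, the Kubernetes Horizontal Pod Autoscaler documentation describes the rule `desiredReplicas = ceil(currentReplicas * currentMetricValue / desiredMetricValue)`. The sketch below applies that rule in plain Python. The function name, the replica bounds and the example metric values are assumptions for illustration, and the `tolerance` default of 0.1 is meant to mirror the documented default rather than any particular cluster's configuration.

```python
import math


def desired_replicas(current_replicas: int,
                     current_metric: float,
                     target_metric: float,
                     min_replicas: int = 1,
                     max_replicas: int = 10,
                     tolerance: float = 0.1) -> int:
    """Replica count suggested by the ratio of observed to target metric."""
    ratio = current_metric / target_metric
    # Skip scaling when the metric is already close enough to the target.
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas
    wanted = math.ceil(current_replicas * ratio)
    # Clamp to the configured bounds, which matter on small edge nodes.
    return max(min_replicas, min(max_replicas, wanted))


print(desired_replicas(4, 90, 60))   # 6: load is 1.5x the target, so scale out
print(desired_replicas(4, 62, 60))   # 4: within tolerance, no change
print(desired_replicas(4, 20, 60))   # 2: load is well below target, so scale in
print(desired_replicas(8, 200, 50))  # 10: capped at max_replicas
```

On an edge site, a low `max_replicas` is one simple way to keep scaling decisions within the limited capacity of the hardware.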

Apart from the Kubernetes IoT Edge Working Group community, many companies have key developments in progress to integrate and use the power of Kubernetes for edge and IoT. I will cover more details about Kubernetes for edge in upcoming articles.