Kubernetes started in 2014. For the next two years, adoption of Kubernetes as a container orchestration engine was slow but steady compared with counterparts such as Amazon ECS, Apache Mesos, Docker Swarm, and GCE. After 2016, Kubernetes started creeping into many IT systems that run a wide variety of container workloads and demand higher performance for scheduling, scaling, and automation. The goal was to enable a cloud native approach, with a microservices architecture for application deployments. Leading tech giants (AWS, Alibaba, Microsoft Azure, Red Hat) have launched new solutions based on Kubernetes, and in 2018 they are consolidating to build a de facto Kubernetes solution that can cover every use case involving dynamic hyperscale workloads.

Two very recent acquisitions show how large an impact Kubernetes has had on the IT ecosystem: IBM's acquisition of Red Hat and VMware's acquisition of Heptio. IBM did not show direct interest in container orchestration itself, but it had its eyes on Red Hat's Kubernetes-based OpenShift.

VMware's acquisition of the Kubernetes solution firm Heptio drew a lot of attention at VMworld Europe 2018. The acquisition is said to have a significant impact on the data center ecosystem, where Red Hat (IBM) and Google are among the top players. Heptio's solution will be integrated with VMware's Pivotal Container Service (PKS) to make it a de facto Kubernetes standard that covers the widest possible range of data center use cases across private, multicloud, and public cloud.

Heptio was founded by ex-Google engineers Joe Beda and Craig McLuckie in 2016. In its two years, Heptio caught the eye of industry giants with its offerings and its contributions to cloud native technologies based on Kubernetes. Heptio had also raised $33.5 million through two funding rounds.

So the question is why, and for which kinds of use cases, Kubernetes is being used or tested.


Enabling Automation and Agility in Networking with Kubernetes

Leading Communication Service Providers (CSPs) are demonstrating 5G in selected cities. 5G networks will support a wide range of use cases that need very low latency and high bandwidth. CSPs will need to deploy network services at the edge of the network, where data is generated by a large number of digitally connected devices. To deploy services at the edge and keep control over every point of the network, CSPs will need automated orchestration across each part of it. Also, as CSPs adopt software containers to deploy Virtual Network Functions, they will take a cloud native approach, using microservices-based network functions and real-time operations driven by CI/CD methodologies. In this scenario, Kubernetes has emerged as an enterprise-level container management and orchestration tool, and it brings a number of advantages in this environment.

Jason Hunt wrote in his LinkedIn post: “Kubernetes allows service providers to provision, manage, and scale applications across a cluster. It also allows them to abstract away the infrastructure resources needed by applications. In ONAP’s experience, running on top of Kubernetes, rather than virtual machines, can reduce installation time from hours or weeks to just 20 minutes.” He added that CSPs are using a mix of public and private clouds to run network workloads, and that Kubernetes works well across all types of clouds to handle workloads of any scale.
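To make "provision, manage, and scale applications across a cluster" concrete, here is a minimal sketch in Python that builds a Kubernetes Deployment manifest and changes its replica count. The application name `vnf-firewall`, the image `example.com/nfv/firewall:1.0`, and the helper names are hypothetical, chosen only for illustration; the manifest fields (`apps/v1`, `replicas`, `selector`, `template`) follow the standard Deployment schema.

```python
import copy
import json


def deployment_manifest(name: str, image: str, replicas: int) -> dict:
    """Build a Deployment manifest that runs `replicas` copies of one container."""
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name},
        "spec": {
            "replicas": replicas,
            # The selector must match the labels on the Pod template.
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {"containers": [{"name": name, "image": image}]},
            },
        },
    }


def scaled(manifest: dict, replicas: int) -> dict:
    """Return a copy of the manifest with a new replica count."""
    if replicas < 0:
        raise ValueError("replicas must not be negative")
    out = copy.deepcopy(manifest)
    out["spec"]["replicas"] = replicas
    return out


if __name__ == "__main__":
    # Hypothetical network function; name and image are placeholders.
    base = deployment_manifest("vnf-firewall", "example.com/nfv/firewall:1.0", 2)
    print(json.dumps(scaled(base, 5), indent=2))
```

Because JSON is accepted wherever Kubernetes expects a manifest, the printed output could be saved to a file and applied with `kubectl apply -f`. Scaling is then just a matter of declaring a different `replicas` value and letting Kubernetes reconcile the cluster to match it.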

Another example of Kubernetes in telecom networks is the recent release of Nokia CloudBand software for NFV. This release, CBIS 19, adds support for edge network deployments and containerized workloads, and integrates Kubernetes for container management alongside OpenStack, which handles virtual machines. The use of containers within the NFV architecture has been discussed for the last few years, but this release is one of the first concrete instances of employing containers and container management to handle network functions in NFV infrastructure.

Kubernetes and AI/Machine Learning

KubeFlow – Managing machine learning stacks

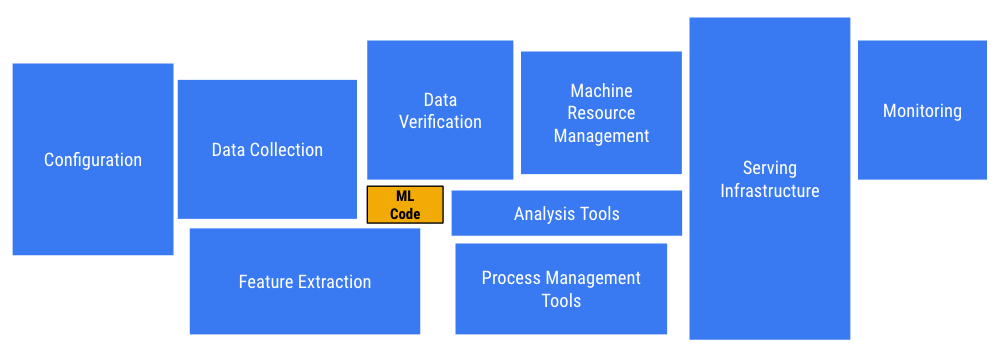

Beyond managing containers, Kubernetes has evolved so much that enterprises have started using it to manage complex workloads for machine learning applications. Machine learning applications and systems contain several software components, tools, and libraries from different vendors, all integrated to process information and generate output. Connecting and deploying all of these components requires manual effort that is tedious and takes a fair amount of time. In most cases, the hardest part is that machine learning models are not portable: they have to be re-architected when moving from a development environment to a highly scalable cloud cluster. To address this concern, the open framework KubeFlow was introduced. It comes with machine learning stacks pre-integrated into Kubernetes, so that a project can be instantiated easily and quickly and can scale extensively.

Image source: https://www.kubeflow.org/blog/why_kubeflow/

Kubernetes for eCommerce retailer JD.com

Besides the launch of KubeFlow, one interesting application of Kubernetes for AI is at JD.com, a Chinese e-commerce retailer that manages the world’s largest Kubernetes clusters, with more than 20,000 bare metal servers in several clusters across data centers in multiple regions.

In an interview with CNCF, Liu Haifeng (Chief Architect, JD.com) was asked how Kubernetes is helping JD with AI and big data analytics. He said:

“JDOS, our customized and optimized Kubernetes supports a wide range of workloads and applications, including big data and AI. JDOS provides a unified platform for managing both physical servers and virtual machines, including containerized GPUs and delivering big data and deep learning frameworks such as Flink, Spark, Storm, and Tensor Flow as services. By co-scheduling online services and big data and AI computing tasks, we significantly improve resource utilization and reduce IT costs.”

JD.com was named winner of the Top End User Award by CNCF for its contribution to the cloud native ecosystem.

Managing hardware resources using Kubernetes

Kubernetes can also be used to manage hardware resources such as graphics processing units (GPUs) in public cloud deployments. In a presentation at KubeCon China this year, Hui Luo, a software engineer at VMware, demonstrated how Kubernetes can be used to handle machine learning workloads in a private cloud as well.
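As a rough illustration of how GPUs are requested as a schedulable resource, the sketch below builds a Pod manifest in Python that asks for GPUs through the `nvidia.com/gpu` extended resource. It assumes a cluster whose nodes run the NVIDIA device plugin; the Pod name `training-job`, the image `example.com/ml/trainer:latest`, and the helper function are hypothetical placeholders, not taken from the presentation.

```python
import json


def gpu_pod_manifest(name: str, image: str, gpus: int = 1) -> dict:
    """Build a Pod manifest that asks the scheduler for `gpus` whole GPUs."""
    if gpus < 1:
        raise ValueError("gpus must be at least 1")
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name},
        "spec": {
            "restartPolicy": "Never",
            "containers": [
                {
                    "name": name,
                    "image": image,
                    # GPUs are requested under `limits` as an extended resource;
                    # the Pod is only placed on a node with enough free GPUs.
                    "resources": {"limits": {"nvidia.com/gpu": gpus}},
                }
            ],
        },
    }


if __name__ == "__main__":
    # Hypothetical training workload; name and image are placeholders.
    manifest = gpu_pod_manifest("training-job", "example.com/ml/trainer:latest", gpus=2)
    print(json.dumps(manifest, indent=2))
```

The same manifest works regardless of whether the GPU nodes sit in a public or a private cloud, which is the point of treating hardware as just another resource the scheduler tracks.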

Summary:

As enterprises embrace open source technologies more widely to reduce costs, Kubernetes has evolved from just a container orchestration framework into a platform that handles more complex workloads of different types. As most of the software industry has leaned towards cloud native, dividing monolithic applications into small services that can be scaled and managed independently and that communicate through APIs, Kubernetes has become the de facto standard for taking care of all services residing in containers. The same Kubernetes mechanisms have been adopted to handle NFV, machine learning, and hardware resource workloads.
