This article was originally published on The New Stack. We are republishing it here.

Since its inception, Kubernetes has emerged as a leading application orchestration framework for every kind of data center, from private and public clouds to far-edge sites. Enterprises are now adopting Kubernetes to orchestrate containers deployed at the edge and to handle a wide variety of workloads.

Kubernetes was initially used to manage containers that hosted applications with only a minimal amount of storage. Accordingly, the first containerized applications followed the stateless microservice design pattern. As it matures, Kubernetes is graduating to hosting more challenging stateful workloads. The new trend is to orchestrate storage workloads in pods, extending portability and other cloud-native benefits to the data that applications store. This makes it easy for IT admins to take an application's storage along when moving to another data center or another public cloud vendor, which is hugely important from a CIO's perspective.

Download our ebook, A Deep-Dive On Kubernetes For Edge, which covers current adoption scenarios for Kubernetes in edge use cases, the latest Kubernetes + edge case studies, deployment approaches, commercial solutions and efforts by open communities.

Ceph is a widely used software-defined storage system that provides multimodal data access (object, block and file storage) on networked servers. Ceph also brings deployment and operational complexity, which operators currently manage themselves. This complexity stems from the enormous flexibility typical of an open source project: Ceph exposes a very large number of tunable settings, and expert operators revel in tuning their clusters in detail.

As Kubernetes adoption becomes widespread, it will become natural to design data centers around the hosting needs of diverse types of data and the dynamic orchestration of that data. Moreover, in the world of containerized microservices, the need for data and application portability calls for a simple point solution that fully exploits flexible data center tools like Kubernetes and Ceph.

Rook: Merging the Power of Kubernetes and Ceph

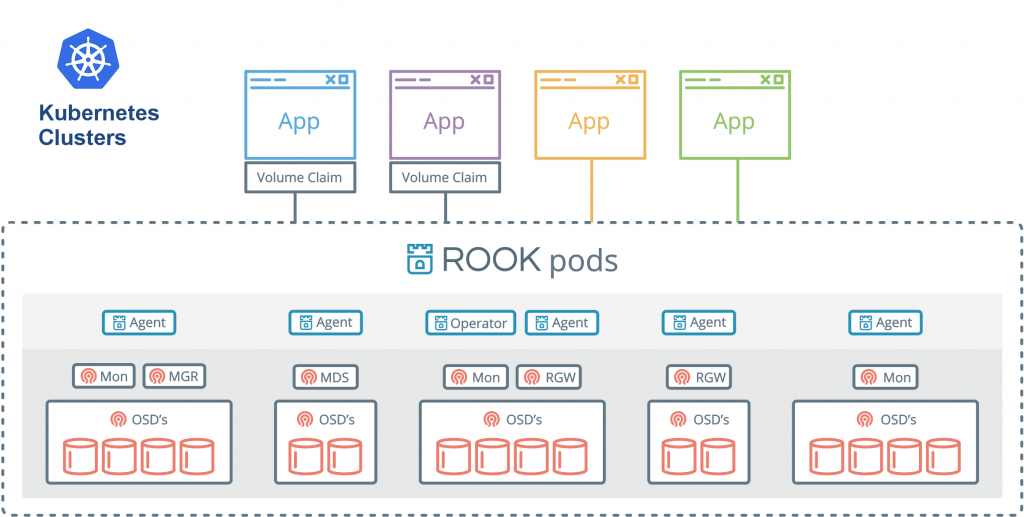

Enter Rook, a storage orchestrator for cloud-native environments. Rook ties together Kubernetes and Ceph, the Red Hat-backed open source scale-out storage platform, to deliver a dynamic storage environment for high-performance, dynamically scaling storage workloads.

Working with storage systems like Ceph, Rook addresses Kubernetes storage challenges such as dependency on local storage, lock-in to a cloud provider's storage services, and the burden of day-2 operations within the infrastructure.

Rook is a Kubernetes storage operator: it helps deploy and manage Ceph clusters and monitors them for compliance with the desired runtime configuration. “Operator” is a newer Kubernetes buzzword that captures the idea well: admins only declare the desired state of the Ceph cluster, and Rook watches for changes in state and health, analyzes the differences between the desired and the actual state, and applies the configuration needed to reach the desired state.
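The declare-watch-diff-apply cycle described above is the general Kubernetes "reconcile loop" pattern. The following Python sketch illustrates that pattern only; it is not Rook's code. The names `CephClusterState`, `plan` and `reconcile`, and the reduction of a cluster to monitor and OSD counts, are hypothetical simplifications, and a real operator would call the Kubernetes API instead of returning a simulated state.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class CephClusterState:
    """Hypothetical, highly simplified view of a Ceph cluster."""
    mons: int  # number of Ceph monitor daemons
    osds: int  # number of Ceph object storage daemons (OSDs)


def plan(desired: CephClusterState, observed: CephClusterState) -> list[str]:
    """Diff the desired state against the observed state and list the actions needed to converge."""
    actions = []
    for daemon in ("mons", "osds"):
        want, have = getattr(desired, daemon), getattr(observed, daemon)
        if have < want:
            actions.append(f"create {want - have} {daemon}")
        elif have > want:
            actions.append(f"remove {have - want} {daemon}")
    return actions


def reconcile(
    desired: CephClusterState, observed: CephClusterState
) -> tuple[list[str], CephClusterState]:
    """One pass of a control loop: compute the actions, then 'apply' them.

    This sketch only simulates the effect: it returns the state the cluster
    would reach once every action succeeds.
    """
    actions = plan(desired, observed)
    converged = replace(observed, mons=desired.mons, osds=desired.osds)
    return actions, converged


if __name__ == "__main__":
    desired = CephClusterState(mons=3, osds=6)   # what the admin declares
    observed = CephClusterState(mons=2, osds=8)  # e.g. one monitor lost, two extra OSDs
    actions, state = reconcile(desired, observed)
    print(actions)                         # ['create 1 mons', 'remove 2 osds']
    print(reconcile(desired, state)[0])    # [] : already converged, nothing to do
```

Because the loop compares states rather than replaying commands, running it again on a converged cluster is a no-op. This is what lets an operator run continuously and repair drift, such as a failed daemon, without admin intervention.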

Rook brings orchestration benefits to Ceph storage clusters, such as simplified deployment, bootstrapping, configuration, provisioning, scaling (up and down), upgrading, migration, monitoring and resource management.

Ceph itself automates storage management, while Rook sits on top of Kubernetes clusters to automate administrator-facing operations, relieving the storage team of day-to-day operational work.

Summary

Kubernetes has made applications cloud native, but application storage did not get cloud-native features from the start. Rook bridges that gap by making storage cloud native in conjunction with Ceph and other storage systems. Rook does for storage what Kubernetes did for applications deployed in containers, bringing dynamic data storage orchestration to modern data centers. Rook also looks promising for its ability to reduce the effort required of a data center's storage team. Increased use of Rook in modern data center design promises to free storage from lock-in, as Kubernetes has done for compute.

For more technical details, refer to the presentations by Sean Cohen, Federico Lucifredi and Sébastien Han at OpenStack Summit 2019 and KubeCon 2019.