Everyone is fascinated by the upcoming 5G telecom network, which will boost innovative technologies and bring new use cases and architectures to enhance the digitally connected world. Edge computing is one of the major features of 5G, and it will change the way devices interact with centrally located data centers. Ultra-low latency, higher bandwidth and secure data transmission are the core requirements of upcoming use cases like AR, VR, connected/autonomous cars, IoT, IIoT, etc. The network edge will address these demands.

A network edge is imagined as a mini data center, physically close to the digital devices, where data generated by devices like sensors (in the IoT case), smartphones, autonomous cars and industrial equipment is processed, aggregated, summarized and tactically analyzed, and the refined or resulting data is then forwarded to the cloud. This eliminates the need to send large volumes of raw data to the cloud, which reduces network traffic and, in turn, lowers response times and transmission costs.
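To make the "aggregate, summarize, forward" step concrete, here is a minimal sketch of what an edge node might do with a window of sensor readings before sending anything upstream. The sensor names, the window contents and the `summarize` helper are hypothetical; the point is only that many raw readings collapse into one small record per sensor.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class Summary:
    """One compact record per sensor, sent to the cloud instead of raw readings."""
    sensor_id: str
    count: int
    minimum: float
    maximum: float
    average: float


def summarize(readings: dict[str, list[float]]) -> list[Summary]:
    """Collapse each sensor's raw readings in a time window into a single summary."""
    return [
        Summary(sid, len(vals), min(vals), max(vals), mean(vals))
        for sid, vals in readings.items()
        if vals  # skip sensors that reported nothing in this window
    ]


if __name__ == "__main__":
    # Hypothetical window of raw readings collected at the edge node.
    raw = {
        "temp-01": [21.0, 21.5, 22.0, 21.5],
        "temp-02": [19.0, 19.5],
    }
    for s in summarize(raw):
        print(s)  # 6 raw values become 2 records forwarded to the cloud
```

In a real deployment the window size and the statistics kept would depend on what the cloud-side analytics actually need.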

The Problem

The challenge with the edge, however, is actually delivering services to consumers with dynamic performance and scaling them as needed.

Edge nodes vary in size and have diverse resource requirements. For example, some edge nodes are small and dedicated to specific tasks; others might be dedicated to telco-specific deployments or to a single venue such as a football stadium. Such edges will also be spread across thousands of locations, all connected to a central cloud or data centers. Workloads on the edges will be significant and will keep increasing as the number of connected devices grows. Moreover, edge infrastructure will be equipped with software and hardware components from many different vendors. These components will use different mechanisms for processing and communicating, and they will be continuously integrated, monitored and updated to launch new services to end consumers. Overall, the edge will be a highly networked set of mini data centers that are physically close to digital devices and customers.

AT&T’s Edgility – Bringing Serverless Capability to the Edge

AT&T developed an initiative called “Edgility” to address these challenges at the network edge. Edgility started as an AT&T compute initiative contributing to Akraino Edge Stack, an AT&T-led Linux Foundation project for the edge stack.

The idea behind Edgility is to implement serverless functionality that serves the events triggered by use cases like IoT while using fewer computing resources at the edge.

Let’s discuss the architecture of Edgility: which frameworks are used, and how serverless functions work with Akraino Edge Stack.

A 5G network based on edge architecture will have a central cloud or data center with hundreds of edge nodes connected to it, continuously sharing data. In Edgility, each node is based on Akraino Edge Stack. Every edge node deployment is characterized by a cloud-native approach based on a microservices architecture, in which workloads can be managed using both containers and virtual machines to meet application requirements for reliability and high performance. This approach offers the scalability of cloud-based services and improved utilization of resources.

AT&T is a founding member of Akraino Edge Stack, which launched in February 2018. To jump-start the Akraino Edge Stack community, AT&T supplied seed code designed for carrier-scale edge computing applications running in virtual machines and containers, supporting their reliability and performance requirements. Akraino Edge Stack is a complete and open software developer platform for edge computing systems and applications.

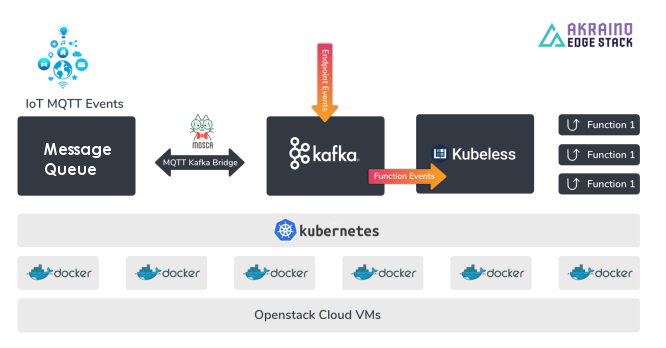

In the Akraino Edge Stack-based Edgility architecture, the major component is Kubeless, a Kubernetes-based serverless framework that integrates into Akraino Edge Stack to enable function as a service (FaaS). Kubeless itself is deployed on top of Kubernetes clusters and so benefits from the advantages Kubernetes offers.

The figure below shows Akraino Edge Stack enabling serverless functions: IoT MQTT events produced through a message queue (on the left side) and endpoint events produced through Kafka are both processed by Kubeless.
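As a rough illustration of what a function behind such a trigger could look like, the sketch below follows the Kubeless Python runtime convention of a `handler(event, context)` function with the message payload under `event["data"]`. The payload fields (`sensor_id`, `speed_kmh`) and the `SPEED_LIMIT_KMH` threshold are hypothetical, and since the payload may arrive either decoded or as raw JSON depending on the trigger and content type, the sketch handles both. Check your runtime version's documentation for the exact event fields.

```python
import json
from typing import Any

SPEED_LIMIT_KMH = 80.0  # hypothetical threshold, for illustration only


def handler(event: dict[str, Any], context: Any) -> str:
    """Process one MQTT/Kafka message (Kubeless-style signature, assumed)."""
    data = event.get("data")
    # The payload may arrive already decoded (dict) or as raw JSON text/bytes.
    if isinstance(data, (bytes, bytearray)):
        data = data.decode("utf-8")
    if isinstance(data, str):
        data = json.loads(data)
    speed = float(data["speed_kmh"])
    # Return a JSON string so the result serializes the same way in any runtime.
    return json.dumps({
        "sensor_id": data["sensor_id"],
        "speed_kmh": speed,
        "over_limit": speed > SPEED_LIMIT_KMH,
    })


if __name__ == "__main__":
    # Local smoke test with a hand-built event; no cluster or broker involved.
    fake_event = {"data": '{"sensor_id": "cam-7", "speed_kmh": 92.5}'}
    print(handler(fake_event, context=None))
```

Keeping the function this small is what lets the platform start it only when a message arrives rather than running a full service around it.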

Akraino Edge Stack provides a microservices architecture in which workloads are deployed mainly in containers, which have a smaller footprint in a computing environment. Integrating the FaaS capability offered by Kubeless goes a step further, from microservices to smaller functions that are invoked only when a certain event is triggered. Until then, those functions sit idle without consuming resources at the edge, leaving the available computing power to active services. Breaking microservices into event-triggered functions in this way is at the core of the Edgility platform.
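The event-triggered model can be illustrated with a toy, in-process router: functions are bound to topics and execute only when an event is published on a bound topic. This is a hypothetical stand-in written for this article, not the Kubeless API; the topic names and the `ToyTriggerRouter`, `check_speed` and `log_endpoint` names are all assumptions.

```python
from collections import Counter
from typing import Callable, Dict, Optional

Event = Dict[str, object]
Function = Callable[[Event], str]


class ToyTriggerRouter:
    """Hypothetical in-process stand-in for FaaS triggers (not the Kubeless API).

    Functions are registered against a topic and run only when an event is
    published to that topic; otherwise no handler code executes.
    """

    def __init__(self) -> None:
        self._routes: Dict[str, Function] = {}
        self.invocations: Counter = Counter()

    def register(self, topic: str, fn: Function) -> None:
        self._routes[topic] = fn

    def publish(self, topic: str, event: Event) -> Optional[str]:
        fn = self._routes.get(topic)
        if fn is None:
            return None  # no function bound to this topic, so nothing runs
        self.invocations[fn.__name__] += 1
        return fn(event)


def check_speed(event: Event) -> str:
    return "alert" if float(event["speed_kmh"]) > 80 else "ok"


def log_endpoint(event: Event) -> str:
    return f"logged {event['path']}"


if __name__ == "__main__":
    router = ToyTriggerRouter()
    router.register("mqtt/traffic", check_speed)
    router.register("kafka/endpoints", log_endpoint)

    print(router.publish("mqtt/traffic", {"speed_kmh": 95}))       # alert
    print(router.publish("mqtt/traffic", {"speed_kmh": 40}))       # ok
    print(router.publish("kafka/endpoints", {"path": "/status"}))  # logged /status
    print(router.publish("mqtt/unbound", {"speed_kmh": 10}))       # None
    print(dict(router.invocations))  # {'check_speed': 2, 'log_endpoint': 1}
```

A real FaaS platform adds the parts this toy omits, such as scheduling function pods on the cluster and scaling them with demand.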

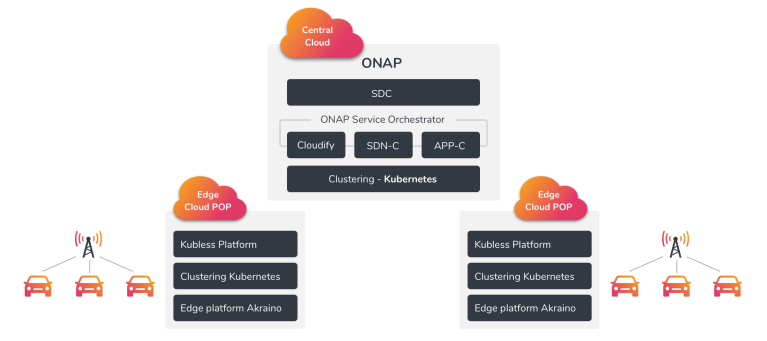

AT&T’s other major open source contribution to the Linux Foundation, the Open Network Automation Platform (ONAP), is used to design, deploy and orchestrate Akraino Edge Stack.

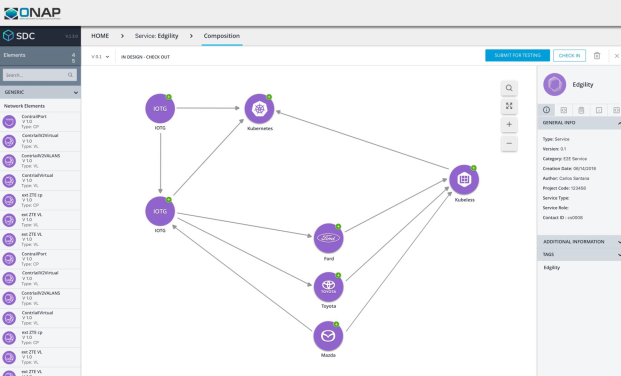

Within ONAP, Service Design and Creation (SDC) is used to create blueprint models, and Cloudify with TOSCA serves as the service orchestrator that deploys those blueprint models across a network containing multiple edge stacks. At both the ONAP level and the edge node (i.e., the edge cloud POP), functions are clustered using Kubernetes. The overall network is monitored and visualized with Prometheus and Grafana, which are integrated with ONAP.

Edgility’s Contribution to Akraino Edge Stack

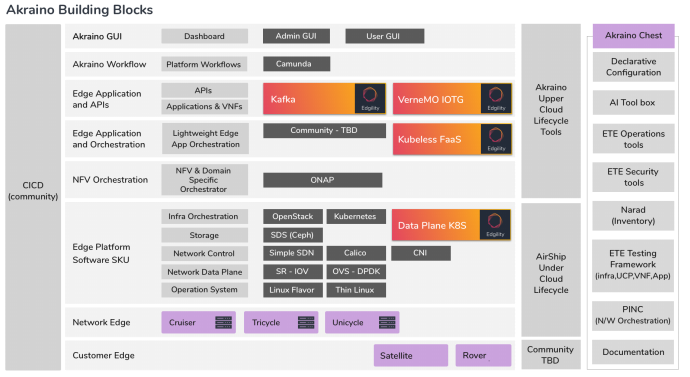

Each edge cloud POP is based on Akraino Edge Stack. However, to fully enable FaaS, or a serverless edge, the Edgility team has contributed to Akraino by integrating Kafka, Message Queue IOTG, Kubeless and Data Plane Kubernetes.

Predictive Operations in Second Phase

This represents the first phase of Edgility, in which deployment and operations were successfully tested on a real-life use case: an Intelligent Transport System. The second phase of Edgility will add artificial intelligence and machine learning capabilities by integrating Acumos, another Linux Foundation open source framework. With Acumos integrated, the Edgility platform can provide predictive orchestration of service functions as well as dynamic resource utilization based on analysis from the Acumos ML tools.
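To show what "predictive orchestration" means in the simplest possible terms, here is a sketch that forecasts the next interval's load with a moving average and converts it into a replica count for a function. This is a deliberately naive stand-in, not Acumos and not an Edgility algorithm; the window size, the per-replica capacity and the load history are hypothetical values.

```python
import math
from typing import Sequence


def forecast_next(load_history: Sequence[float], window: int = 3) -> float:
    """Predict the next interval's load as the mean of the last `window` observations."""
    if not load_history:
        raise ValueError("need at least one observation")
    recent = load_history[-window:]
    return sum(recent) / len(recent)


def replicas_for(predicted_load: float, capacity_per_replica: float,
                 min_replicas: int = 0, max_replicas: int = 10) -> int:
    """Turn a predicted load into a replica count, clamped to [min, max]."""
    needed = math.ceil(predicted_load / capacity_per_replica)
    return max(min_replicas, min(max_replicas, needed))


if __name__ == "__main__":
    history = [120, 150, 210, 240]  # hypothetical events/s per interval
    predicted = forecast_next(history)
    print(predicted)  # 200.0
    print(replicas_for(predicted, capacity_per_replica=50))  # 4
```

An ML model would replace `forecast_next` with something that captures trends and seasonality; the step that turns a forecast into capacity would look much the same, and `min_replicas=0` lets an idle function scale away entirely.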

Business Advantages of Edgility

With a vast and complex 5G network and thousands of edge nodes, equipping every edge with well-provisioned, high-performance infrastructure to offer all services increases CAPEX for service providers. Yet a limited set of IT resources and computing power raises concerns about the availability and reliability of edge services. Edgility brings the serverless, or FaaS, methodology to deploying edge application services. Enterprises are adopting serverless to save costs in the public cloud, where charges accrue only when a function is called and actually consumes resources. Similarly, Edgility helps make optimal use of resources by giving them to active services and functions while keeping inactive ones idle. Overall, Edgility creates an event-driven, high-performance platform built on microservices, whose functions can be hosted on the public cloud as well as in external data centers. Service providers gain control over where they host function workloads, saving on CAPEX investments while keeping cloud-native benefits.
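The cost argument comes down to simple arithmetic: an always-on service reserves compute for the whole period, while an event-driven function consumes compute only while it runs. The sketch below compares the two for one hypothetical edge function; the instance count, invocation count and average duration are illustrative assumptions, not measurements, and real billing or capacity models have more terms (memory, cold starts, minimum charges).

```python
def always_on_seconds(instances: int, hours: float = 24.0) -> float:
    """Compute-seconds reserved by instances that stay up for the whole period."""
    return instances * hours * 3600


def on_demand_seconds(invocations: int, avg_duration_ms: int) -> float:
    """Compute-seconds actually consumed when a function runs only per event."""
    return invocations * avg_duration_ms / 1000


if __name__ == "__main__":
    # Hypothetical daily workload for one edge function (illustrative, not measured).
    reserved = always_on_seconds(instances=1)                          # 86400.0
    used = on_demand_seconds(invocations=20_000, avg_duration_ms=250)  # 5000.0
    print(f"busy fraction: {used / reserved:.1%}")                     # busy fraction: 5.8%
```

Under these assumptions, the always-on instance would sit idle for roughly 94% of the day; that idle capacity is what an event-driven edge can hand to other active functions.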

Summary

Edge computing is the critical architecture for enabling most 5G use cases. However, how much computing capacity each edge node will require remains an open question, and with the growing number of devices and volume of data, provisioning for it could become cumbersome. Edgility addresses this gap by offering a serverless edge node in which computing resources are utilized and optimized to accommodate varying data processing demands.
