The data of any organization is of extreme value. But what happens when that data is not trustworthy and accessible to your teams? You will face challenges because of data silos, insufficient information, and inconsistent records. These problems do not just affect the bottom line; they stifle the ability of the company to innovate, grow, and stay agile.

According to research reports, with an estimated value of $30.35 billion in 2023 and projected to reach $94.16 billion by 2036, the role of integrating and managing trustworthy data has never been more apparent. Therefore, the Product Lifecycle Management (PLM) frameworks for software development for PLM-based products are crucial for maintaining data integrity.

This blog details how PLM can transform software development, especially for products that rely on large language models (LLMs). Dive into the blog to learn through each stage of the PLM process, from planning and requirements gathering to development, testing, deployment, and ongoing maintenance.

PLM in Software Development for LLM-Based Products

Product Lifecycle Management (PLM) is a structured approach that aims to direct any phase of software development from conception to retirement. In the case of software with LLM, the role of PLM becomes even more complex. This is primarily due to specific computational, data, and ethical considerations in AI models.

These models perform unpredictable higher-order tasks that depend upon various factors. Thus, implementing the PLM principle in the final product is necessary for business objectives, compliance norms, and user demands.

Key elements of PLM in LLM-based software

Implementing Product Lifecycle Management in software development for LLM-based products involves several key elements:

- Customize PLM Stages: Adapt each PLM phase to fit your LLM’s unique needs, including iterative learning, data dependencies, and ethical concerns.

- Manage Your Data: Ensure you have high-quality, diverse datasets for training while maintaining data integrity and privacy compliance.

- Allocate Computational Resources: Set aside enough processing power to handle the demands of training and deploying your LLM.

- Focus on Ethics and Compliance: To maintain user trust and compliance, tackle issues like bias, and meet legal standards.

- Monitor and Maintain Continuously: Keep an eye on model drift and performance issues so you can update and retrain as needed.

- Engage Stakeholders: Make sure your product goals align with business objectives to get the most from your resources.

By focusing on these elements, the development and maintenance of LLM-based software can be streamlined. Now let us explore each stage of the PLM process.

LLM-Based Software Development: Planning

Planning is the first step of any Product Lifecycle Management strategy and is crucial in developing LLM-based software. This stage outlines project objectives, data requirements, technical specifications, and expected difficulties. Thus, you will have a solid foundation for every step of the process in the life cycle.

Core Objectives of the Planning Stage

Unlike traditional software, which may run on set logic, LLMs read and write data in less predictable ways. The software’s objective could be to improve customer service, automate content, or provide predictive analytics.

- This planning stage specifies the data type, volume, source, compliance, and ethical requirements.

- Technical specifications define the technical requirements, including model architecture, computation resources required, and the target deployment environment.

- Identify all the potential risks-model drift, data bias, or ethical concerns.

- Define key performance indicators that would be measured in the model, such as accuracy, user satisfaction, response time, or the ability to comply with privacy standards.

Pre-planning for the requirement of data in LLM

Data is the backbone of any LLM, but it dictates how the model understands language and produces related responses. In planning, you can break down the data requirements in the following ways:

- Data Volume and Variety: You should use large volumes of data to train high-performing models. However, the data must be sufficiently diverse to improve generalization.

- Quality Control and Preprocessing Requirements: Data has to be strictly preprocessed to remove noise, errors, and biases that may mislead the model.

- Privacy and Compliance: Most jurisdictions enforce strict laws on data handling. The planning phase ensures that all data collection methods comply with such laws.

Once the planning phase confirms the project’s goals, data requirements, and technical needs, you can focus on gathering all the detailed requirements.

Requirements Gathering for LLM-Based Software

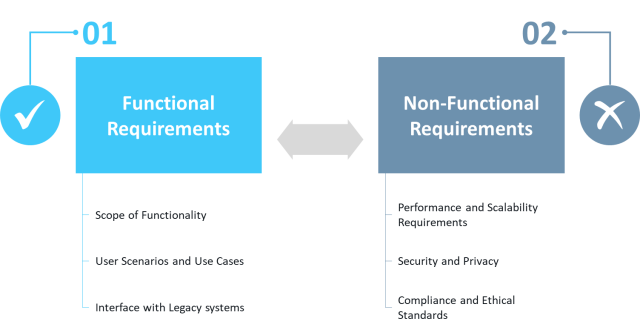

Requirements gathering is a comprehensive activity that defines what the LLM-based software must achieve and how it should perform. This step includes identifying functional requirements for the system’s actions and non-functional requirements for how it should behave under specific conditions.

Compared to traditional software, an LLM-driven product is much more challenging to gather requirements for as LLMs introduce data management needs, ethical concerns, and unpredictable model behavior.

Critical Areas of Requirements Gathering

Functional Requirements

- Scope of Functionality: These describe the significant functionalities that the model is to carry out, such as language processing, sentiment analysis, or even query generation.

- User Scenarios and Use Cases: Identifying generic user scenarios, such as an inquiring customer service chatbot, makes the purposes LLMs would address clearer to end-users.

- Interface with Legacy Systems: Since these products would be an adjunct to their existing software and hence compatible, you should describe clear integration points into other software tools and applications.

Non-Functional Requirements

- Performance and Scalability Requirements: LLMs consume resources. The requirements gathering will need to specify the expected performance and scalability requirement; hence, the system will need to handle fluctuations in workload.

- Security and Privacy: LLM-based systems must be highly secure in health care or finance domains. Requirements should mention encryption, how the data would be treated, and access privileges for protecting the user’s data.

- Compliance and Ethical Standards: With LLMs, ethical considerations extend to mitigating model bias and ensuring responsible AI use.

Practical Steps for Requirements Gathering

When gathering requirements for LLM-based software, it’s essential to take a structured and adaptable approach. Here are some practical steps and challenges to keep in mind:

- Gather input from end-users, managers, and technical teams for a well-rounded view of requirements.

- Outline data sources, volumes, and privacy needs upfront to avoid bottlenecks later.

- Track each requirement across the project to stay aligned with goals.

At this stage, with clearly documented and aligned requirements from the project objectives comes the model development.

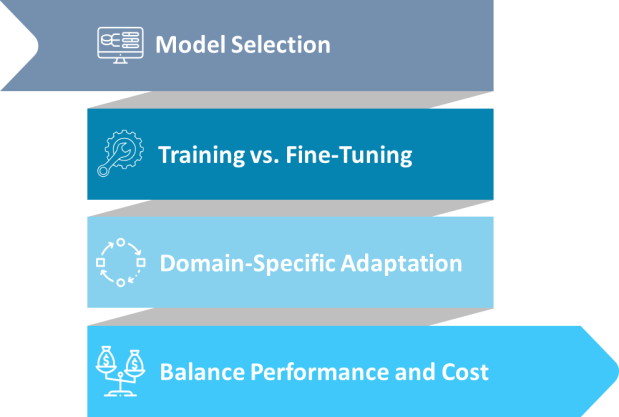

Model Development and Prompt Engineering for LLM-Based Software

After planning and requirement gathering, the second phase in Product Lifecycle Management is model development. This step converts the objectives and requirements documented to an executable model prepared for integration.

Key Elements of Model Development

Model development for LLM-based software requires careful planning and optimization. Here are the key elements to focus on:

- Model Selection: Choose a suitable model based on task needs, dataset size, and computational resources.

- Training vs. Fine-Tuning: Decide whether to train from scratch or fine-tune an existing model for your specific application.

- Domain-Specific Adaptation: Tailor the model to the relevant industry or use case to increase accuracy and relevance.

- Balance Performance and Cost: Use techniques like model pruning to manage costs without sacrificing quality.

These elements ensure you build an efficient, cost-effective model that meets project goals and user expectations.

Role of Prompt Engineering in LLM Performance

Prompt engineering is the art of producing input that can elicit the most accurate and relevant output from an LLM. It is necessary to obtain a model that meets requirements and remains efficient in processing and response accuracy.

Here are the key elements to focus on:

- Use specific, context-rich prompts to lead the model toward accurate, relevant outputs.

- Provide examples within prompts or use logical steps to enhance the model’s understanding, especially for complex tasks.

- Standardize prompts for consistency and ease of management across applications.

- Continuously refine prompts based on testing to improve output quality and relevance.

After you develop and engineer prompts, it’s time to rigorously test the model to ensure reliable performance and meet project goals.

Testing and Quality Assurance for Software Integrated with LLM

The Test stage of the Product Lifecycle Management process is critical. It ensures that the model’s outputs align with the project’s goals, user expectations, and ethical standards.

QA in LLM-based software goes beyond regular software testing since it cannot determine the outcome of responses in AI. Hence, testing must cater to functional accuracy and non-functional factors, including bias, safety, and compliance.

Types of Testing for LLM-Based Products

Testing LLM-based products involves several types to ensure they meet performance, ethical, and functional standards. Here’s a quick overview:

Functional Testing

- Accuracy Testing: For a model to deliver correct answers on the various applications, ensuring its reliability in producing relevant, accurate answers.

- Output Validations: Against the output and set rules or with known correct answers, on use cases, such as how to answer known responses, like for the customer-inquiry answering service.

- Simulation of Real-World Use Case: This simulates common scenarios, such as customer servicing queries, to assess its use case in response generation and practicality.

Performance Testing

- Latency Testing: Applications that demand in-real-time responses, like a chatbot, would test the response time. You need to fine-tune the models to deliver responses as quickly as possible but not at the cost of quality.

- Scalability Testing: Applications with a significant traffic volume will demand scaling testing to ensure that heavy loads do not degrade model performance.

Bias and Fairness Testing

- Bias Detection and Mitigation: LLMs may produce biased or inappropriate responses when the training data is inherently biased. Testing for fairness involves checking responses across various scenarios and demographics to ensure unbiased outputs.

- Ethical Compliance Testing: This testing is particularly relevant in sensitive domains such as healthcare. It ensures that the model respects user privacy, provides accurate information, and does not cause harm.

A/B Testing for Continuous Improvement:

- Iterative Testing and Refinement: Through A/B testing, you can refine the model’s responses and compare them under different conditions based on user feedback and performance metrics. Such testing enables incremental improvements in the model’s accuracy and user satisfaction.

Safety and Compliance Testing:

- Regulatory Compliance Checks: For applications dealing with sensitive data, conduct testing checks to ensure compliance with privacy laws like GDPR, CCPA, or HIPAA.

- Content Moderation: Public-facing applications should undergo testing through content moderation, which you can expect to filter out undesirable or inappropriate responses.

With testing complete, it’s time to focus on deployment strategies that bring LLM-based software products to life.

Deploying LLMs within Software Products

Deploying LLMs within software products involves selecting the proper integration approach. A robust deployment pipeline with CI/CD practices enables seamless updates and scalability.

Important Deployment Models

Deployment models for LLM-based software vary based on response time needs, scalability, and cost efficiency. Here’s a look at two important models:

- Real-Time Processing (API Integration):

- Immediate responses via API are ideal for chatbots.

- Requires high computational power and low latency.

- Needs load balancing for stability under heavy traffic.

- Batch Processing:

- Processes data at scheduled intervals, suitable for tasks without immediate response needs.

- Cost-effective, as it reduces infrastructure demands.

- Great for analyzing large datasets, like customer sentiment analysis.

Each model has unique advantages depending on response requirements and resource availability.

Cloud Native Deployment vs. On-Premises

Cloud and on-premises deployment are two primary options for deploying LLM-based software, each with distinct benefits and challenges.

| Deployment Type | Advantages | Challenges | Security Measures |

| Cloud Deployment |

|

|

|

| On-Premises Deployment |

|

|

|

After deployment, maintenance and monitoring adjusts according to changing data, user needs, and compliance standards are consistent.

Maintenance and Model Updates

LLM-based software PLM maintenance is an ongoing task to optimize model performance and ensure compliance with regulatory demands. This phase is more than a technical adjustment. It incorporates user feedback so that the model is precise and does not deteriorate with time.

Essential Components of LLM Maintenance

Maintaining LLM-based software ensures long-term performance, relevance, and compliance. Here are essential components to focus on:

- Model Monitoring: Track performance metrics like accuracy and response time to detect issues early.

- Address Model Drift: Regularly retrain or fine-tune to counteract performance decay over time.

- Data Updates: Refresh datasets to keep outputs relevant and aligned with current contexts.

- User Feedback Integration: Collect feedback to identify improvement areas and refine model responses.

- Compliance Checks: Continuously ensure adherence to privacy and ethical standards.

- Error Logging and Analysis: Track errors and analyze logs to understand recurring issues and improve response accuracy.

- Version Control: Maintain records of model versions to track changes and assess the impact of updates.

- Automated Alerts: Set up alerts for key performance dips or compliance issues to address them promptly.

- Resource Optimization: Regularly assess and adjust computational resources to balance performance and cost efficiency.

- Security Audits: Conduct periodic security audits to protect data integrity and model reliability.

Watch our latest webinar on LLMs for Enterprise Success

Conclusion

Product Lifecycle Management (PLM) for LLM-based software is a strategic need in the fast-changing AI and software environment. Understanding and implementing the whole lifecycle sets your product up to succeed. It starts with careful planning and gathering requirements and goes on to precise model development, rigorous testing, and strategic deployment.

By integrating LLMs, teams can automate documentation, predict development bottlenecks, and improve code quality through advanced analysis. However, careful implementation and alignment with organizational goals are essential to harness their full potential. As LLMs continue to evolve, their role in PLM will further strengthen, paving the way for more adaptive, intelligent, and agile software development cycles that meet the demands of modern technology.

Calsoft is a leading technology-first partner and one of the pioneers in embracing Gen AI at an early stage. For over 25 years, Calsoft has been helping its customers solve their business challenges in various domains including AI/ML.