This article was originally published on TheNewStack. We are republishing it here.

Software-based services delivered to endpoints are composed of different sub-application components. Currently, such services typically rely on a client-server architecture and run over a traditional network: a proprietary enterprise network, broadband, or dedicated leased lines. So far, we have seen a large shift toward microservices-based architecture, which enables faster development and independent management of small software modules using containers. Serverless functions have also started to come into practice. All of these applications were designed for networks in which low latency and high throughput were not major concerns.

But the time has now come when applications will be written with more technical factors in mind, such as the state of the network and the node on which the application will execute.

As networks evolve toward 5G, which will be software-based and will allow applications to run near user devices or where data is generated, the architecture and development of the overall service (built from several software applications) should transition to a new paradigm.


The ETSI standards body has created a working group around Multi-access Edge Computing (ETSI MEC) to address the key requirements of an ideal edge computing environment. It recently released a whitepaper with guidance on software development for MEC-based edge clouds.

ETSI MEC provides the edge-computing framework needed to support this new paradigm for service providers and application developers. With MEC, developers can design services whose applications obtain information about their runtime environment, understand its characteristics, and adjust their behavior to achieve the desired output. In addition, MEC allows applications to use bandwidth information to request the network resources they need at runtime, delivering low latency and high throughput in a controlled and predictable way.

Simply put, when a middle edge node is deployed, the application components of a service designed for use case ‘X’ have different computing and performance requirements at each point along the path from the data center to end-user devices. It is therefore imperative for software developers to architect the service so that, at each layer, an application changes its behavior for specific operations while taking network characteristics (traffic, security policies, etc.) into account, mostly at runtime. MEC provides an edge platform for this, so that a software service delivered to end users stays up to the mark and optimizes its execution as network conditions rise and fall.
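To make this kind of runtime adaptation concrete, here is a minimal Python sketch, assuming the edge platform reports available bandwidth and round-trip time to the application. `NetworkInfo`, `PROFILES`, `choose_profile` and `bandwidth_shortfall` are hypothetical names and the thresholds are illustrative; this is not the ETSI MEC API, whose actual services (such as radio network information and bandwidth management) are defined in the MEC specifications.

```python
from dataclasses import dataclass


@dataclass
class NetworkInfo:
    """Hypothetical snapshot of network conditions an edge platform might expose."""
    available_bandwidth_mbps: float
    round_trip_ms: float


# Hypothetical service profiles, ordered from most to least demanding:
# (name, minimum bandwidth in Mbps, maximum tolerable round-trip time in ms).
PROFILES = [
    ("high", 25.0, 20.0),
    ("medium", 8.0, 50.0),
    ("low", 2.0, 150.0),
]


def choose_profile(info: NetworkInfo) -> str:
    """Return the most demanding profile the current network can sustain."""
    for name, min_bw, max_rtt in PROFILES:
        if info.available_bandwidth_mbps >= min_bw and info.round_trip_ms <= max_rtt:
            return name
    # Nothing fits: degrade to local buffering until conditions improve.
    return "buffer-locally"


def bandwidth_shortfall(info: NetworkInfo, profile: str) -> float:
    """Mbps the app would need to request to run `profile`; 0.0 if none is needed."""
    for name, min_bw, _ in PROFILES:
        if name == profile:
            return max(0.0, min_bw - info.available_bandwidth_mbps)
    raise ValueError(f"unknown profile: {profile}")


if __name__ == "__main__":
    now = NetworkInfo(available_bandwidth_mbps=10.0, round_trip_ms=15.0)
    print(choose_profile(now))               # medium
    print(bandwidth_shortfall(now, "high"))  # 15.0
```

In a real deployment, the shortfall figure is what an application would turn into a resource request through whatever interface its edge platform actually provides.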

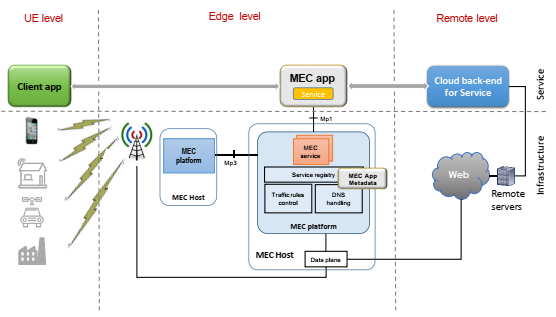

As per the MEC whitepaper: “the end-to-end service can be split into three applications or components: terminal device component(s), edge component(s) and remote component(s).” These applications are managed at three different infrastructure layers. But is this type of distributed service deployment suitable for developers of application components? Can application developers get an easy environment for rapid development and deployment at both the network edge and the central cloud?

Terminal device components are lightweight applications executed on the data-generating device. Generally, low-level computational operations, such as managing data transmission and other small tasks, are handled at this layer. Edge components are supposed to provide a wide range of capabilities for overall service delivery and should be highly responsive to the latency demands of terminal devices. Remote components, or the cloud, can be used for tasks that do not have strict delivery-time requirements.
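The three-tier split can be expressed as a simple placement rule. The Python sketch below is hypothetical: `Task`, `Tier`, `place` and the numeric thresholds are illustrative assumptions, not part of the MEC whitepaper. It keeps small tasks on the terminal device, sends heavy latency-sensitive tasks to the edge, and leaves delay-tolerant work to the remote cloud.

```python
from dataclasses import dataclass
from enum import Enum


class Tier(Enum):
    TERMINAL = "terminal device"
    EDGE = "edge"
    REMOTE = "remote"


@dataclass
class Task:
    name: str
    latency_budget_ms: float  # deadline the result must meet
    compute_units: float      # hypothetical abstract measure of compute demand


# Illustrative assumptions; real values depend on the device and deployment.
TERMINAL_MAX_COMPUTE = 1.0  # largest task the device can run itself
EDGE_RTT_MS = 10.0          # assumed round trip to the edge node
REMOTE_RTT_MS = 80.0        # assumed round trip to the central cloud


def place(task: Task) -> Tier:
    """Pick a tier for a task following the terminal/edge/remote split."""
    if task.compute_units <= TERMINAL_MAX_COMPUTE:
        return Tier.TERMINAL  # small tasks stay on the data-generating device
    if task.latency_budget_ms >= REMOTE_RTT_MS:
        return Tier.REMOTE    # not latency-critical, so the cloud is acceptable
    if task.latency_budget_ms >= EDGE_RTT_MS:
        return Tier.EDGE      # latency-sensitive and too heavy for the device
    raise ValueError(f"{task.name}: no tier can meet {task.latency_budget_ms} ms")


if __name__ == "__main__":
    for t in [
        Task("batch-sensor-readings", latency_budget_ms=5.0, compute_units=0.2),
        Task("video-analytics", latency_budget_ms=30.0, compute_units=8.0),
        Task("daily-report", latency_budget_ms=60_000.0, compute_units=50.0),
    ]:
        print(t.name, "->", place(t).value)
```

Preferring the remote tier whenever the latency budget allows is a design choice in this sketch: it keeps scarce edge capacity for the tasks that actually need it.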

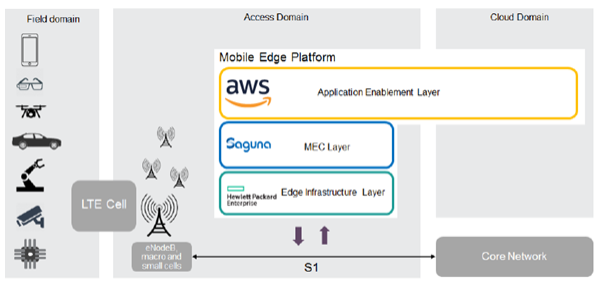

Last year, AWS, HPE and Saguna Networks collaborated on a PoC and released a whitepaper outlining their application enablement ecosystem using mobile edge computing. The whitepaper focused on leveraging existing mobile network infrastructure to establish a platform that enables a new wave of revenue-generating applications for 5G. One solution uses AWS Greengrass, which brings AWS edge applications to the MEC platform so that developers can easily get started on application development.

With this, platform service providers or enterprises can easily deploy MEC and manage edge applications within the fabric of their mobile network using the Saguna Edge Cloud MEC software solution running on HPE’s Edgeline hardware.

In addition to AWS’s offering, Azure Stack is also available, with a variety of options for the infrastructure and MEC layers. Recently, at Mobile World Congress 2019, AT&T announced a PoC using Azure Stack.

Summary

We have seen a revolution in software application development since containers arrived and IT firms started adopting CI/CD. Now, as the focus of many enterprises and technology vendors shifts to the edge, a new architecture may evolve, because distributed applications run in a different environment with different latency requirements. Managing such distributed applications across platform boundaries is one of the most complex challenges facing the industry today. A goal for services in an edge-based environment should be to put the right application development environment in place, enabling faster innovation for end consumers.