What is Medical Imaging?

Over the last ten years, the radiological sciences have advanced dramatically, especially in medical imaging and computerized medical image processing. Disease diagnosis has improved significantly and become more efficient thanks to rapidly developing multidimensional medical imaging modalities such as those listed below:

- X-ray

- CT Scan (Computed Tomography)

- SPECT (Single Photon Emission Computed Tomography)

- PET (Positron Emission Tomography)

- Ultrasound or Sonography

- MRI (Magnetic Resonance Imaging)

- fMRI (functional Magnetic Resonance Imaging)

These techniques help in understanding the disease as well as in initiating and evaluating ongoing treatment. In addition, the resulting image datasets are used for further analysis of these diseases worldwide.

Computerized Medical Image Processing and Analysis

Heather Landi, a senior editor at Fierce Healthcare, writes that, according to estimates by IBM researchers, medical images are the largest and fastest-growing data source in the healthcare industry and account for at least 90 percent of all medical data.

We can use a computer to process and manipulate multidimensional digital images of physiological structures in order to visualize hidden diagnostic features that are very difficult, or perhaps impossible, to see using planar imaging methods.

Smith-Bindman reports on a study of imaging use from 1996 to 2010 across six large healthcare systems in the United States, covering 30.9 million imaging tests. The researchers found that over the study period, CT, MRI and PET usage increased by 7.8%, 10% and 57% respectively. This amounts to a very large volume of data when you compare the number of radiologists around the world with the amount of radiographic examination data that has to be analysed. Computerized medical image processing offers a way to address this problem, and we discuss it in detail in the rest of this article.

BioMedical Image Processing and Analysis

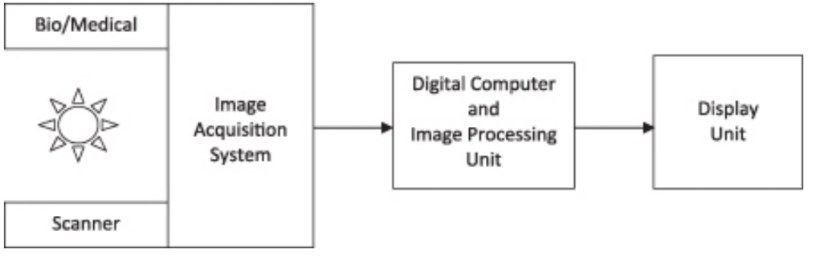

A biomedical image processing and analysis system consists of three components, as listed below:

- Image acquisition system

- A high-performance computer

- An Image display environment

The image acquisition system transforms the biomedical signal or radiation that carries the information into a digital image. The image is then represented by an array of numbers that a computer can process as a two-dimensional or three-dimensional image. Each imaging modality uses a different image acquisition system as its imaging instrument. For example, an MRI examination uses an MRI machine, which uses powerful magnets and radio waves to scan the body and form a digital image of the scanned parts.
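As a rough illustration of "an array of numbers", the sketch below stores a tiny grayscale slice as nested Python lists and stacks copies of it into a 3D volume. The pixel values and the `shape` helper are made up for illustration; real systems typically use dedicated array libraries and file formats.

```python
# Hypothetical 4x4 grayscale slice: each number is one pixel's intensity.
slice_2d = [
    [0, 10, 10, 0],
    [10, 200, 180, 10],
    [10, 190, 210, 10],
    [0, 10, 10, 0],
]


def shape(array):
    """Return the dimensions of a rectangular nested list."""
    dims = []
    while isinstance(array, list):
        dims.append(len(array))
        array = array[0]
    return tuple(dims)


# A 3D volume is a stack of 2D slices (here the same slice copied 3 times).
volume_3d = [[row[:] for row in slice_2d] for _ in range(3)]

print(shape(slice_2d))     # (4, 4)
print(shape(volume_3d))    # (3, 4, 4)
print(volume_3d[1][2][1])  # intensity at slice 1, row 2, column 1: 190
```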

The second component of this system is a powerful computer, which is needed to store and process these digital images. High-end processors and GPUs are used for real-time image processing tasks such as image enhancement, pseudo-color enhancement, mapping, and histogram analysis.
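Two of the tasks mentioned above, histogram analysis and image enhancement, can be sketched in a few lines. The example below assumes an 8-bit grayscale image stored as nested lists and uses linear contrast stretching as one simple enhancement technique. The function names and sample values are illustrative, not taken from any particular imaging system.

```python
def histogram(image, levels=256):
    """Count how many pixels take each intensity value."""
    counts = [0] * levels
    for row in image:
        for pixel in row:
            counts[pixel] += 1
    return counts


def contrast_stretch(image, levels=256):
    """Linearly rescale intensities so they span the full 0..levels-1 range."""
    flat = [p for row in image for p in row]
    lo, hi = min(flat), max(flat)
    if hi == lo:  # flat image: nothing to stretch
        return [row[:] for row in image]
    scale = (levels - 1) / (hi - lo)
    return [[round((p - lo) * scale) for p in row] for row in image]


# Hypothetical low-contrast image: values are squeezed into 100..150.
image = [
    [100, 110, 120],
    [110, 120, 130],
    [120, 130, 150],
]

stretched = contrast_stretch(image)
print(histogram(image)[120])  # 3 pixels have intensity 120
print(stretched)              # [[0, 51, 102], [51, 102, 153], [102, 153, 255]]
```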

The third component is an output device: either a high-resolution display to show the image or a printer to produce a hard copy of the medical radiographic examination results.

Deep Learning Applications in Medical Image Analysis

Machine learning has been practically everywhere in recent years, whether in your phones and smart devices or in the licence plate detection systems that catch you running a traffic signal. Image analysis has been tremendously successful and has become a common feature. For example, when you upload a group photo to Facebook, its face detection algorithm tags your friends in that photo automatically. Fortunately, some people thought about using this capability for the betterment of humanity. In fact, it started well before the birth of social media: in the late 1970s, GOFAI (Good Old Fashioned Artificial Intelligence) systems were the first to put a computer to work on medical image processing. By the 1990s, models such as the active shape model and the atlas model had gained popularity in medical image analysis.

Convolutional Neural Network in Medical Image Processing – Supervised Learning

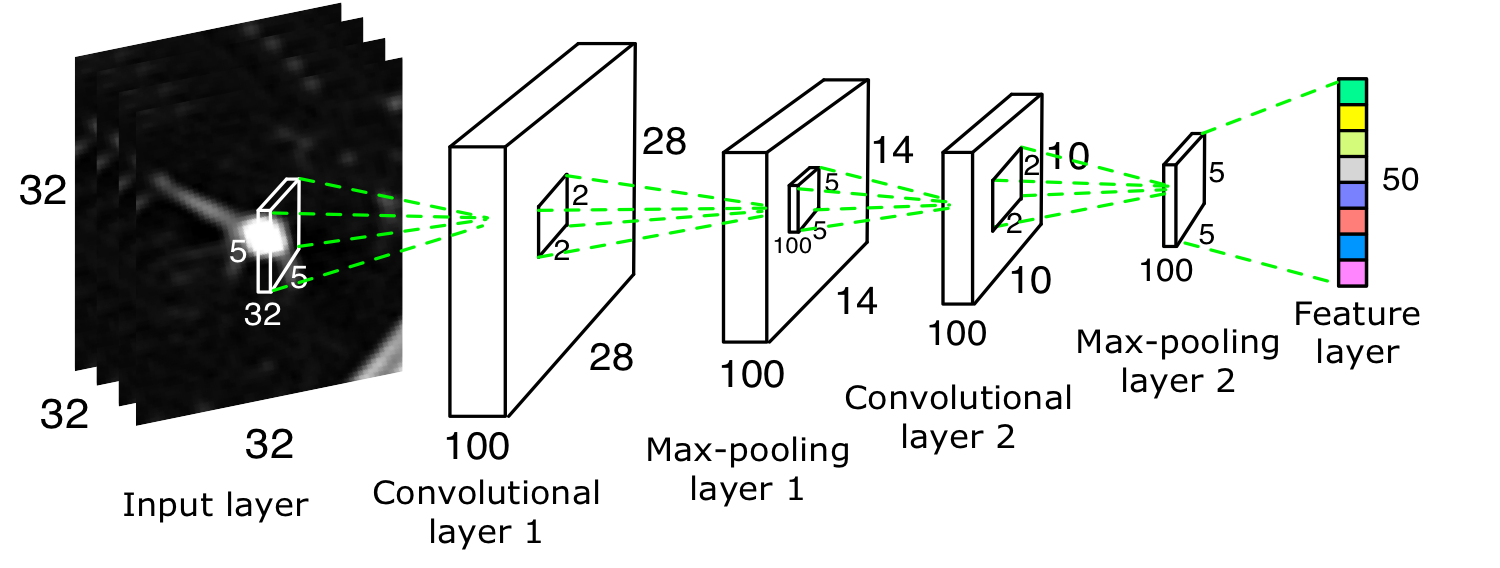

However, the most successful methods for medical image analysis have been Convolutional Neural Networks (CNNs). A CNN works in layers, with each layer detecting and recognizing features: shallow features such as edges in the shallow layers and deeper features in the deep layers. The image is convolved with filters, which are also called kernels. Pooling is applied, and after further processing the recognizable features are obtained. The first real-world application of a CNN was not seen until 1998, in LeNet (handwritten digit recognition).

As shown in the image above, the CNN architecture consists of the layers listed below:

- Convolution Layer: Convolution can be defined as an operation on two functions. The first function consists of input values, for example the pixel values at a position in the image. The second function is a kernel, or filter, represented as an array of numbers. Multiplying the two element by element and summing the products gives one output value. The filter is then shifted to the next position in the image, as defined by the stride length. This process is repeated until the complete image has been covered, which produces an activation map, or feature map. The data obtained from this process is used to identify shapes, dots, body parts, etc. The feature maps are the inputs to the ReLU layer in this architecture.

- Rectified Linear Unit (ReLU) Layer: The Rectified Linear Unit is an activation function that sets all negative input values to zero. It is used to simplify computation and training.

- Pooling Layer: To reduce the number of parameters to be computed, the pooling layer reduces the height and width (but not the depth) of the feature maps. It is inserted between a ReLU layer and the next convolution layer.

- Fully Connected Layer: The fully connected layer is the final layer of this architecture, and every neuron in this layer is connected to every neuron in the preceding layer. There can be one or multiple fully connected layers, depending on the feature abstraction required. To summarize, the fully connected layer takes the combination of the most strongly activated features to decide which class the image belongs to.
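The four layers described above can be sketched as a single forward pass in plain Python. This is a minimal illustration, not a trainable network: the image, the kernel, and the fully connected weights and biases are hand-picked hypothetical values, and the convolution uses no padding.

```python
def conv2d(image, kernel, stride=1):
    """Slide the kernel over the image (no padding) and sum the products."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = (len(image) - kh) // stride + 1
    out_w = (len(image[0]) - kw) // stride + 1
    out = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            total = 0
            for a in range(kh):
                for b in range(kw):
                    total += image[i * stride + a][j * stride + b] * kernel[a][b]
            row.append(total)
        out.append(row)
    return out


def relu(feature_map):
    """Set every negative value to zero."""
    return [[max(0, v) for v in row] for row in feature_map]


def max_pool(feature_map, size=2):
    """Keep the largest value in each non-overlapping size x size window."""
    out = []
    for i in range(0, len(feature_map) - size + 1, size):
        row = []
        for j in range(0, len(feature_map[0]) - size + 1, size):
            window = [feature_map[i + a][j + b]
                      for a in range(size) for b in range(size)]
            row.append(max(window))
        out.append(row)
    return out


def fully_connected(features, weights, biases):
    """One score per class: weighted sum of all input features plus a bias."""
    return [sum(w * x for w, x in zip(ws, features)) + b
            for ws, b in zip(weights, biases)]


# Hypothetical 5x5 image containing a bright vertical stripe.
image = [[0, 0, 1, 1, 0] for _ in range(5)]
# Hand-picked kernel that responds to dark-to-bright vertical edges.
kernel = [[-1, 1],
          [-1, 1]]

feature_map = conv2d(image, kernel)   # rows of [0, 2, 0, -2]
activated = relu(feature_map)         # rows of [0, 2, 0, 0]
pooled = max_pool(activated)          # [[2, 0], [2, 0]]
flat = [v for row in pooled for v in row]

# Hypothetical weights for two classes; a real network learns these.
weights = [[0.5, 0.0, 0.5, 0.0],
           [0.0, 0.5, 0.0, 0.5]]
biases = [0.0, 0.1]
scores = fully_connected(flat, weights, biases)
print(scores)  # [2.0, 0.1]
```

The negative responses at the bright-to-dark edge are removed by the ReLU step, and pooling halves the height and width of the feature map before the fully connected layer scores each class.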

Other deep learning approaches used in this field include transfer learning with CNNs and Recurrent Neural Networks (RNNs).

Applications of Deep Learning in Medical Image Analysis

- Classification: Here every exam is considered a sample. Object or lesion classification assigns the image to one of two or more classes. For these tasks, both the local and global appearance of the lesion and its location are required for accurate classification.

- Detection: Localization of anatomical objects such as organs or lesions is an important pre-processing step for the segmentation task. Localizing objects in an image requires 3D parsing of the image.

- Segmentation: Segmentation is the task of identifying the contour or object of interest (an organ or lesion).

- Registration: Registration refers to fitting one image (which may be two- or three-dimensional) onto another. Image registration is used in neurosurgery and spinal surgery to localize a tumor and thereby facilitate its surgical removal.
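To make the idea of registration concrete, the sketch below aligns two small 2D images by trying every integer translation within a limited range and keeping the one with the lowest sum of squared differences. This is a deliberately simple classical approach chosen for illustration, not a deep learning method and not what clinical software uses. The function names and the test images are hypothetical.

```python
def shift(image, dy, dx, fill=0):
    """Translate the image by (dy, dx) pixels, filling uncovered pixels."""
    h, w = len(image), len(image[0])
    out = [[fill] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            sy, sx = y - dy, x - dx
            if 0 <= sy < h and 0 <= sx < w:
                out[y][x] = image[sy][sx]
    return out


def ssd(a, b):
    """Sum of squared differences between two images of equal size."""
    return sum((pa - pb) ** 2
               for row_a, row_b in zip(a, b)
               for pa, pb in zip(row_a, row_b))


def register_translation(fixed, moving, max_shift=2):
    """Find the (dy, dx) that best fits the moving image onto the fixed one."""
    best = None
    for dy in range(-max_shift, max_shift + 1):
        for dx in range(-max_shift, max_shift + 1):
            cost = ssd(fixed, shift(moving, dy, dx))
            if best is None or cost < best[0]:
                best = (cost, dy, dx)
    return best[1], best[2]


# Hypothetical 6x6 "fixed" image with a bright 2x2 structure in the middle.
fixed = [[0] * 6 for _ in range(6)]
for y in (2, 3):
    for x in (2, 3):
        fixed[y][x] = 9

# The "moving" image shows the same structure displaced down 1 and left 1.
moving = shift(fixed, 1, -1)

dy, dx = register_translation(fixed, moving)
print(dy, dx)  # -1 1: move up 1 and right 1 to undo the displacement
```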