Data is one of an organization’s most valuable assets. But what happens when that data isn’t trustworthy or accessible across teams? Most companies must deal with unreliable, out-of-date, or redundant data.

According to Gartner, poor data quality can add an average of $15 million to an organization’s annual costs; another widely cited estimate puts the figure at $12.9 million. In the U.S. alone, businesses lose an estimated $3.1 trillion annually due to poor data quality. The damage is not only financial: poor data also leads to less reliable analysis, weaker governance, risk of non-compliance, loss of brand value, and slower corporate growth. Embracing data quality best practices isn’t optional; it is a necessity.

Increasingly, companies realize that good-quality data is nothing less than a business and competitive necessity. However, investing in tools alone will not help. You need a strategic approach.

Continue reading the blog to learn the six essential steps to effective Data Quality Management, right from setting standards to achieving a data-driven culture.

What is Data Quality Management (DQM)?

Data Quality Management, in simple words, refers to the systematic approach to maintaining high-standard data. It includes data governance, regular quality checks, and other practices.

DQM also sets policies for responsibly handling, storing, and sharing data, creating a robust framework for managing data throughout its life cycle.

Ultimately, DQM supports more reliable decision-making by reducing the likelihood of poor-quality data that could lead to errors or compliance issues.

Importance of Data Quality Management

Data Quality Management is both a technical process and a critical business strategy. It is the backbone of any effective data governance strategy and ensures that data is always fit for its intended purpose.

- Reliable Decision-Making: Quality data ensures that business decisions rest on accurate and complete information.

- Risk Minimization: Errors resulting from poor data quality can lead to severe consequences. Proper data quality management minimizes the risk of acting on inaccurate data.

- Operational Efficiency: DQM reduces the need for repeated data correction and for extensive preparation of large volumes of data.

- Customer Experience: Accurate data lets you provide relevant and timely interactions, which leads to more satisfied and loyal customers.

- Regulatory Compliance: Many industries have strict data management regulations. DQM enables organizations to stay compliant with industry standards and avoid costly penalties.

Read the blog to understand the significance of data in ensuring a robust analytics strategy: Guide to Analytics Strategy

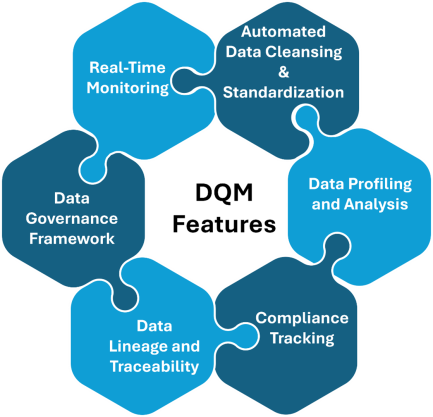

Key Features of Data Quality Management

DQM systems encompass several features that collectively ensure data integrity and reliability across the organization.

- Data Governance Frameworks: A data governance framework defines the policies, roles, and responsibilities regarding data quality.

- Real-Time Monitoring: Modern DQM tools also support real-time monitoring, so errors are discovered as they occur.

- Automated Data Cleansing and Standardization: DQM generally includes automated tools for removing duplicate data, correcting errors, and standardizing date and address formats.

- Data Profiling and Analysis: Through data profiling, data quality tools assess datasets for consistency, completeness, and accuracy.

- Compliance Tracking: Most DQM solutions include compliance features that track the organization’s adherence to industry-specific guidelines.

- Data Lineage and Traceability: Knowing where data originates and travels within an organization is essential to ensuring quality.

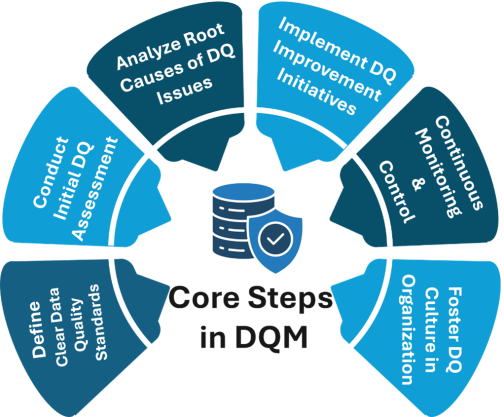

Core Steps in Data Quality Management

Now that we understand Data Quality Management, let’s examine the six core steps that make up a robust DQM framework. Each step builds on the previous, creating a comprehensive and continuous cycle for maintaining data quality.

Step 1: Define Clear Data Quality Standards

Setting standards is the core of any successful DQM strategy. Standards give your organization a benchmark against which to measure data quality. They should reflect your specific business needs and the quality requirements the data must meet.

Setting Data Quality Standards: Basic Elements

- Developing Data Quality Dimensions: Create a core set of dimensions consisting of accuracy, completeness, consistency, validity, and timeliness. These dimensions serve as the criteria against which you measure your data.

- Data Rules and Metrics Development: Define the acceptable formats, permissible values, and completeness criteria that data must satisfy when it is created. Use metrics like error rate, duplicate count, and consistency ratio to track data quality.

- Accountability through Governance: Define the roles and responsibilities of the individuals who are accountable for data quality within the organization.
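As a rough illustration of how data rules and metrics might be written down, the sketch below expresses three rules as simple checks and computes an error rate per rule plus a duplicate count. The sample records, field names, allowed country codes, and the `evaluate` helper are all hypothetical; real standards would come from your own business requirements.

```python
import re

# Hypothetical sample records; field names are illustrative assumptions.
records = [
    {"id": 1, "email": "ana@example.com", "country": "US"},
    {"id": 2, "email": "not-an-email", "country": "US"},
    {"id": 3, "email": "li@example.com", "country": ""},
    {"id": 3, "email": "li@example.com", "country": "DE"},
]

# Assumed format and permissible-value standards.
EMAIL_PATTERN = re.compile(r"^[^@\s]+@[^@\s]+\.[^@\s]+$")
ALLOWED_COUNTRIES = {"US", "DE", "IN"}

# Each rule maps a name to a predicate that returns True when a record passes.
RULES = {
    "email_format": lambda r: bool(EMAIL_PATTERN.match(r.get("email") or "")),
    "country_allowed": lambda r: r.get("country") in ALLOWED_COUNTRIES,
    "id_present": lambda r: r.get("id") is not None,
}


def evaluate(records, rules):
    """Return the error rate for each rule and the number of duplicate ids."""
    total = len(records)
    error_rates = {}
    for name, passes in rules.items():
        failures = sum(1 for r in records if not passes(r))
        error_rates[name] = failures / total if total else 0.0
    ids = [r.get("id") for r in records]
    duplicate_count = len(ids) - len(set(ids))
    return error_rates, duplicate_count


error_rates, duplicate_count = evaluate(records, RULES)
print(error_rates, duplicate_count)
```

Keeping rules as named predicates makes it easy to add or retire a rule without touching the metric calculation.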

Step 2: Conduct Initial Data Quality Assessment

Once you establish your standards, you need to begin your first evaluation. Doing so will give you a better understanding of the current state of data quality.

Essential Assessment Techniques

- Data Profiling: Data profiling tools can assess datasets for completeness, accuracy, and consistency. Profiling tools identify areas with missing or erroneous values and identify patterns that may indicate potential data quality problems.

- Baseline Metrics: Based on assessment results, baseline quality metrics are established. Accuracy percentages, completeness ratios, and error rates provide measurable starting points for tracking quality improvements over time.

- Gap Analysis: Compare current data quality against the defined standards to identify gaps. The size of each gap lets you rank the most important improvement work.

A first-cut data quality assessment gives you a clear picture of the current situation and shows precisely where to target quality improvements.
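The assessment techniques above can be sketched in a few lines: profile each field for completeness, treat the result as the baseline, and compare it with target values to rank the gaps. The dataset, the target ratios, and the function names here are assumptions made for illustration only.

```python
# Hypothetical dataset; None marks a missing value.
rows = [
    {"name": "Ana", "phone": "555-0101", "city": "Austin"},
    {"name": "Li", "phone": None, "city": "Berlin"},
    {"name": "Sam", "phone": "555-0103", "city": None},
    {"name": "Ravi", "phone": None, "city": "Pune"},
]

# Assumed standards from Step 1: minimum completeness ratio per field.
TARGETS = {"name": 1.0, "phone": 0.9, "city": 0.9}


def profile_completeness(rows):
    """Return the share of non-empty values for each field (the baseline)."""
    if not rows:
        return {}
    fields = rows[0].keys()
    return {
        f: sum(1 for r in rows if r.get(f) not in (None, "")) / len(rows)
        for f in fields
    }


def gap_analysis(baseline, targets):
    """Return (field, gap) pairs for fields below target, largest gap first."""
    gaps = {f: round(targets[f] - baseline.get(f, 0.0), 4) for f in targets}
    below_target = [(f, g) for f, g in gaps.items() if g > 0]
    return sorted(below_target, key=lambda item: item[1], reverse=True)


baseline = profile_completeness(rows)
ranked_gaps = gap_analysis(baseline, TARGETS)
print(baseline)
print(ranked_gaps)
```

In this sample, the phone field shows the largest gap, so it would be the first candidate for improvement work.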

Step 3: Analyze Root Causes of Data Quality Issues

Analyzing the causes of data quality problems is an integral part of any DQM strategy. After the initial assessment, you must understand the “why” behind each data problem. This prevents the same kind of problems from arising repeatedly and supports a proactive approach to data quality.

Key Components of Root Cause Analysis in DQM

- Identify Common Data Quality Issues

  - Problems such as missing data, inconsistent formats, duplicate entries, and outdated information often stem from specific data handling procedures.

  - Example: Duplicate customer records might result from data entry standards that differ between departments.

- Utilize Root Cause Analysis Techniques

  - Gap Analysis: Use gap analysis to identify the differences between actual data quality and the stated standards.

  - Fishbone Diagram (Cause-and-Effect Analysis): This diagrammatic tool helps trace the origin of data inconsistency through processes and systems.

  - 5 Whys: Ask “Why?” repeatedly to drill down to the root cause of a data issue. This method is intended to find systemic root causes rather than surface symptoms.

- Document Findings for Process Improvement

  - Keeping detailed records of root causes allows for more precise improvements and prevents the same issues from resurfacing. For instance, if analysis shows that manual data entry errors are a frequent source of problems, automation can become a targeted solution.

If you know and address your root causes, your data quality efforts will become more robust and resilient, thus laying a platform for continuing improvement.
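Before running a Fishbone or 5 Whys session, it can help to see where issues cluster. The sketch below is a simple tally, not an implementation of either technique: it counts logged issues by source system and issue type so the most frequent combination can be investigated first. The issue log, its field names, and the `tally_by` helper are hypothetical.

```python
from collections import Counter

# Hypothetical issue log produced by the initial assessment.
issues = [
    {"type": "duplicate", "source": "sales_crm"},
    {"type": "duplicate", "source": "sales_crm"},
    {"type": "missing_value", "source": "support_portal"},
    {"type": "duplicate", "source": "support_portal"},
    {"type": "bad_format", "source": "sales_crm"},
]


def tally_by(issues, *keys):
    """Count issues grouped by the given keys, most frequent group first."""
    groups = Counter(tuple(issue[k] for k in keys) for issue in issues)
    return groups.most_common()


by_source_and_type = tally_by(issues, "source", "type")
for group, count in by_source_and_type:
    print(group, count)
```

Here the tally points at duplicates from one source system, which is the kind of finding worth documenting and questioning further.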

Step 4: Implement Data Quality Improvement Initiatives

Once you have identified the root causes, the next step is to act. Data quality improvement initiatives correct errors, standardize formats, and enforce consistency throughout the data.

Effective Data Quality Improvement Techniques

- Data Cleansing: This process removes inaccuracies, resolves inconsistencies, and adds missing values. For example, customer data may undergo deduplication and format standardization to ensure every entry is unique and uniformly formatted.

- Data Standardization: Formats for items such as dates, addresses, and IDs are standardized so that every value in a data set follows one pattern. This increases the comparability and usability of the data.

- Data Enrichment: Enrichment adds meaning to existing data by including supplementary information from reliable external sources. It is especially useful for customer or geographic data, where it enables deeper analysis and better targeting.

- Automate Data Validation and Cleansing: Automation is the key to maintaining data quality over time. Automated processes can validate incoming data, check for rule violations, and cleanse data at the point of entry.

With improvement measures in place, your data will be more reliable and valuable. This helps set the stage for more effective monitoring and control in the future.
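To make cleansing and standardization more concrete, here is a minimal sketch that normalizes email addresses, removes duplicates, and converts dates to a single ISO 8601 format. The list of accepted input formats, the record fields, and the helper names are assumptions; ambiguous inputs (for example, day-first versus month-first dates) need a rule agreed on by the business.

```python
from datetime import datetime

# Assumed input formats, tried in order; extend to match your own sources.
DATE_FORMATS = ("%Y-%m-%d", "%d/%m/%Y", "%d.%m.%Y")


def standardize_date(value):
    """Return the date as YYYY-MM-DD, or None if no known format matches."""
    for fmt in DATE_FORMATS:
        try:
            return datetime.strptime(value.strip(), fmt).date().isoformat()
        except ValueError:
            continue
    return None


def cleanse(records):
    """Normalize emails, drop duplicate emails, and standardize dates."""
    seen = set()
    cleaned = []
    for record in records:
        email = record["email"].strip().lower()
        if email in seen:
            continue  # keep only the first occurrence of each email
        seen.add(email)
        cleaned.append(
            {"email": email, "signup_date": standardize_date(record["signup_date"])}
        )
    return cleaned


raw = [
    {"email": "Ana@Example.com ", "signup_date": "2024-03-05"},
    {"email": "ana@example.com", "signup_date": "05/03/2024"},
    {"email": "li@example.com", "signup_date": "07.03.2024"},
    {"email": "sam@example.com", "signup_date": "next week"},
]
cleaned = cleanse(raw)
print(cleaned)
```

Values that cannot be parsed come back as `None` rather than being guessed, so they can be routed to manual review.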

Step 5: Continuous Monitoring and Control

Data quality monitoring is not something you do once and then stop. It is a continuous process that lets you identify and resolve emerging issues quickly. This allows you to keep the data aligned with quality standards and respond to problems that affect critical decisions.

Essential Components of Data Quality Monitoring and Control

- Data Quality Metrics and KPIs: Determine the critical metrics that let you measure against your data quality standards, such as accuracy, completeness, and timeliness. For example, a KPI for customer data might be 95% accuracy for contact information, while a timeliness KPI might require real-time updates for transactional data.

- Set Up Real-Time Alerts and Notifications: Real-time monitoring tools can alert data managers about discrepancies and breaches in data quality. You can set alerts based on tolerance thresholds applicable to specific data quality dimensions.

- Use Data Dashboards for Visualization: Dashboards show data quality metrics at a glance, making it easier for stakeholders to identify trends, catch anomalies, and make decisions. Your teams can then see the health of the data quickly and prioritize improvements accordingly.

- Conduct Regular Data Audits: Scheduled audits verify the quality of data processes and pinpoint areas requiring correction. Such audits compare data to specified standards, document whether compliance is achieved, and suggest improvement measures where deviations occur.

Continuous monitoring ensures that the data quality remains high over time, even with constantly increasing and newly added data sets. This strategy allows you to notice problems in good time.
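A threshold-based alert of the kind described above might look like the following sketch. The threshold values, metric names, and the `check_thresholds` function are assumptions for illustration; the 95% accuracy floor simply mirrors the example KPI mentioned earlier, and a real setup would send the alerts to a notification channel instead of printing them.

```python
# Assumed tolerance thresholds per data quality metric.
THRESHOLDS = {
    "accuracy": {"min": 0.95},
    "completeness": {"min": 0.98},
    "duplicate_rate": {"max": 0.01},
}


def check_thresholds(metrics, thresholds):
    """Return a list of alert messages for metrics outside their tolerance."""
    alerts = []
    for name, limits in thresholds.items():
        value = metrics.get(name)
        if value is None:
            alerts.append(f"{name}: no measurement received")
            continue
        if "min" in limits and value < limits["min"]:
            alerts.append(
                f"{name}: {value:.2%} is below the minimum of {limits['min']:.2%}"
            )
        if "max" in limits and value > limits["max"]:
            alerts.append(
                f"{name}: {value:.2%} is above the maximum of {limits['max']:.2%}"
            )
    return alerts


# Hypothetical latest measurements from a monitoring run.
latest = {"accuracy": 0.97, "completeness": 0.93, "duplicate_rate": 0.02}
alerts = check_thresholds(latest, THRESHOLDS)
for alert in alerts:
    print(alert)
```

A missing measurement is reported as its own alert, since silence from a pipeline is often a quality problem in itself.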

Step 6: Foster a Data Quality Culture in the Organization

The final step of DQM is often the most important for long-term success. It builds an organizational culture that emphasizes and respects data quality. Data quality cannot be solely owned by IT or data teams; you must share it among departments.

Building a Data Quality Culture

- Educate and Train Employees: Plan recurring training sessions to teach employees data quality best practices. Anyone involved in handling data must know how their efforts contribute to overall data quality.

- Assign Clear Accountability: Define data owners’ responsibilities within each business area so they can oversee quality activities based on applicable standards and regulations. Their roles enable the incorporation of quality control into specific areas and make it manageable.

- Incentivize Data-Driven Thinking: Embed data quality within your organization’s values. Encourage your teams to make data-driven decisions based on high-quality data. Promoting success stories of data-driven improvements can build momentum and showcase the impact of quality data.

- Create a Feedback Loop for Continuous Improvement: Collect feedback from all departments about data quality issues and needs for improvement. Set up a channel where employees can raise concerns about data discrepancies or suggest enhancement opportunities in the data processes.

- Reward Data Quality Practices: Reward and recognize the commitment of departments or employees to data quality. This may include public recognition or performance-based incentives, which reinforce the value of quality data within your organization.

By developing a data quality culture, you create a solid foundation in which quality becomes an ingrained part of all operations. It empowers employees to contribute to the continuous development of data quality. Thus, DQM becomes part of the organizational DNA.

Once the DQM process is in place at the foundational level, maintaining data quality should become a standing organizational practice.

Calsoft, as a technology-first company, deeply understands the critical role data plays in any business. In today’s ever-evolving tech landscape, where data is the new gold, cloud-based systems are pivotal in managing the exponential surge in data generation. The more data there is, the greater its usage across diverse systems. As data complexities grow, the need for Efficient Data Management becomes indispensable. Calsoft’s data engineering services are designed to empower businesses with actionable insights from all their data sources.

Download our success story to get more insights: Enterprise Data Quality Management Implementation

Key Metrics for Data Quality Management

To effectively measure and improve data quality, you must establish a set of key performance indicators (KPIs). These metrics provide insights into the accuracy, completeness, consistency, and timeliness of data. Here are some essential metrics to consider:

| Core Metrics | Description |
| --- | --- |
| Accuracy | Error Rate: Percentage of incorrect or invalid data.<br>Validation Error Rate: Percentage of data that fails validation checks.<br>Accuracy Ratio: Percentage of accurate data compared to total data. |
| Completeness | Completeness Rate: Percentage of records with all required fields filled.<br>Missing Data Rate: Percentage of missing data.<br>Duplicate Data Rate: Percentage of duplicate records. |
| Consistency | Data Consistency Ratio: Measure of how consistent data is across different sources.<br>Data Conformity Ratio: Measure of how well data conforms to predefined standards. |
| Timeliness | Data Freshness: Measure of how up-to-date data is.<br>Data Latency: Time taken for data to be updated.<br>Data Delivery Time: Time taken to deliver data to end-users. |
| Validity | Data Validity Ratio: Measure of how valid data is according to business rules.<br>Data Type Validation: Measure of how well data conforms to defined data types.<br>Range Check Validation: Measure of how well data falls within defined ranges. |

Advanced metrics such as uniqueness and security play a vital role in maintaining data quality. Uniqueness can be assessed through Unique Identifier Validation, which measures how distinct the identifiers within a dataset are. Security metrics include the Data Breach Rate, tracking the frequency of data breaches, and the Security Incident Rate, measuring the occurrence of security incidents.

To effectively implement data quality metrics, organizations should first establish a Data Quality Framework that defines standards, roles, and responsibilities. Selecting metrics aligned with business objectives, employing data profiling tools to automate metric collection and analysis, and setting clear targets for each metric are crucial steps. Regular monitoring and a commitment to continuous improvement ensure that insights from these metrics drive corrective actions and foster ongoing enhancements in data quality.

By tracking and analyzing these metrics, you can gain valuable insights into the health of your data, identify potential issues, and take proactive steps to improve data quality.
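As one possible way to compute two of the metrics listed above, the sketch below calculates a consistency ratio between two sources and a freshness rate for a set of records. The formulas are assumptions chosen for illustration (share of matching values among shared ids, and share of records updated within a maximum age); the source names, fields, and function names are hypothetical.

```python
from datetime import datetime, timedelta, timezone


def consistency_ratio(source_a, source_b, field):
    """Share of ids present in both sources whose `field` values match."""
    shared_ids = source_a.keys() & source_b.keys()
    if not shared_ids:
        return None
    matches = sum(
        1 for key in shared_ids
        if source_a[key].get(field) == source_b[key].get(field)
    )
    return matches / len(shared_ids)


def freshness_rate(records, max_age, now):
    """Share of records whose last update is within `max_age` of `now`."""
    if not records:
        return None
    fresh = sum(1 for r in records if now - r["updated_at"] <= max_age)
    return fresh / len(records)


# Hypothetical customer data held in two systems, keyed by customer id.
crm = {
    1: {"email": "ana@example.com"},
    2: {"email": "li@example.com"},
    3: {"email": "sam@example.com"},
}
billing = {
    1: {"email": "ana@example.com"},
    2: {"email": "li@old.example.com"},
    4: {"email": "ravi@example.com"},
}

now = datetime(2024, 6, 1, tzinfo=timezone.utc)
updates = [
    {"updated_at": now - timedelta(hours=2)},
    {"updated_at": now - timedelta(days=3)},
]

ratio = consistency_ratio(crm, billing, "email")
fresh = freshness_rate(updates, timedelta(days=1), now)
print(ratio, fresh)
```

Passing `now` in explicitly keeps the freshness calculation repeatable, which makes the metric easier to audit.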

Conclusion

Good data quality is not merely a nice thing to have; it underpins every data-related success.

We have walked through the core DQM process and its six steps. Supplemented with further best practices, including governance, automation, and consistent auditing, these steps form a complete framework. Quality data empowers you to make correct, timely, and worthwhile decisions.

Are you up for putting these insights into action? Implementing DQM isn’t just a technical upgrade; it’s a strategic advantage. High-quality data enhances every aspect of your operations, from customer satisfaction to regulatory compliance, giving you a clear competitive edge.