Kubernetes assigns an IP address to every pod, an approach known as the “IP-per-pod” model. The IPAM (IP address management) task itself is left to third-party solutions, such as Docker networking, Flannel, IPvlan, Contiv, Open vSwitch, GCE and others.
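IPAM is delegated to the networking solution, but the core idea of IP-per-pod can be sketched in a few lines. The following is a minimal illustration, assuming a single node that owns one pod CIDR; the class name PodIPAllocator and the 10.244.1.0/24 range are hypothetical, and real IPAM solutions must also coordinate ranges across nodes and persist their state.

```python
import ipaddress


class PodIPAllocator:
    """Hypothetical single-node IPAM: hands out one IP per pod from a pod CIDR."""

    def __init__(self, cidr: str) -> None:
        self.network = ipaddress.ip_network(cidr)
        # hosts() excludes the network and broadcast addresses of an IPv4 subnet.
        self._free = list(self.network.hosts())
        self._assigned = {}  # pod name -> IP address

    def allocate(self, pod_name: str):
        # Idempotent: a pod that already has an IP keeps it while it is alive.
        if pod_name in self._assigned:
            return self._assigned[pod_name]
        if not self._free:
            raise RuntimeError(f"pod CIDR {self.network} is exhausted")
        ip = self._free.pop(0)
        self._assigned[pod_name] = ip
        return ip

    def release(self, pod_name: str) -> None:
        # Pods are mortal: a dead pod's IP goes back to the end of the pool,
        # so a replacement pod will usually receive a different address.
        self._free.append(self._assigned.pop(pod_name))


alloc = PodIPAllocator("10.244.1.0/24")
print(alloc.allocate("web-1"))   # 10.244.1.1
print(alloc.allocate("web-2"))   # 10.244.1.2
alloc.release("web-1")
print(alloc.allocate("web-1b"))  # 10.244.1.3
```

Returning released addresses to the end of the pool is a deliberate choice in this sketch: it delays reuse of an IP that clients may still associate with the old pod.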

The Kubernetes architecture consists of a master node and replication controllers, in addition to the nodes that host the pods. Before going further, here is a review of the key Kubernetes terms.

- Pods: Pods are the smallest deployable units that can be created, scheduled or managed. A pod is a logical collection of containers that belong to an application.

- Master: The master node is the central control point that provides a unified view of the cluster. A single master node controls multiple nodes running the pods. It runs the API server, the replication controller and the scheduler.

- Nodes: The servers that run the application workloads. Pods are deployed on nodes. Nodes run containers using Docker, interact with the master through the kubelet agent, and handle service traffic using kube-proxy.

- Services: A service is an abstraction that defines a logical set of pods and a policy for accessing them, giving the application running on those pods a stable address.

- Replication controllers: The part of cluster orchestration that keeps the required number of pods running and healthy.

- Labels: Key-value tags that the system uses to identify pods, replication controllers and services. For example, a service can select all the pods that carry the label “mysqldb”.
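Label-based selection can be sketched as an equality match over key-value pairs. This is a minimal sketch assuming plain string labels; the label key app, its values and the pod names are hypothetical. A selector matches an object when every one of its pairs appears in the object's labels.

```python
from __future__ import annotations


def matches(selector: dict[str, str], labels: dict[str, str]) -> bool:
    """Equality-based match: every key=value pair in the selector must appear in labels."""
    return all(labels.get(key) == value for key, value in selector.items())


def select(selector: dict[str, str], objects: dict[str, dict[str, str]]) -> list[str]:
    """Return the names of all objects whose labels satisfy the selector."""
    return [name for name, labels in objects.items() if matches(selector, labels)]


# Hypothetical pods and labels, for illustration only.
pods = {
    "mysql-0": {"app": "mysqldb", "tier": "backend"},
    "mysql-1": {"app": "mysqldb", "tier": "backend"},
    "web-0": {"app": "frontend", "tier": "web"},
}

print(select({"app": "mysqldb"}, pods))  # ['mysql-0', 'mysql-1']
```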

The various communication modes are discussed below.

- Container-to-container communication

In practice, routable IP addresses are assigned to pods, and all the containers within a pod share the same IP address. When creating Docker containers, the --net=container option makes a new container use the network namespace of an existing container, so effectively all the containers in a pod get an identical IP address. The Linux command “hostname -I” or “ip addr” shows the IP address of a container. Since pods are mortal, this IP might change over time.

The network configuration looks identical in all the containers of a pod, including the loopback address (127.0.0.1), which can be used for communication between containers running on the same host. If one container application wants to communicate with another, it can talk to it directly over localhost, even when the other container does not expose its ports.

```
# Start the first container, named cal_web, from the cumuluslinux image
# (Docker repository names must be lowercase).
docker run -i -t --name=cal_web cumuluslinux bash

# Inside the container, note the IP address.
[root@cumulus /] ip addr
1: lo: <LOOPBACK,UP> mtu 16436 qdisc noqueue
    link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
    inet 127.0.0.1/8 brd 127.255.255.255 scope host lo
2: eth0: <BROADCAST,MULTICAST,UP> mtu 1500 qdisc pfifo_fast qlen 100
    link/ether 00:80:c8:f8:4a:51 brd ff:ff:ff:ff:ff:ff
    inet 172.17.0.35/16 scope global eth0

# Start the next container, sharing the network namespace of cal_web.
docker run -i -t --net=container:cal_web cumuluslinux bash

# Inside this container the interface, and therefore the IP address, is the same,
# because the network namespace is shared.
[root@cumulus /] ip addr
1: lo: <LOOPBACK,UP> mtu 16436 qdisc noqueue
    link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
    inet 127.0.0.1/8 brd 127.255.255.255 scope host lo
2: eth0: <BROADCAST,MULTICAST,UP> mtu 1500 qdisc pfifo_fast qlen 100
    link/ether 00:80:c8:f8:4a:51 brd ff:ff:ff:ff:ff:ff
    inet 172.17.0.35/16 scope global eth0
```
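Processes that share a network namespace see the same loopback interface, which is what lets containers in a pod talk over 127.0.0.1 without exposing ports. The sketch below imitates this with two threads in one Python process (which naturally share one namespace) standing in for two containers. It is an analogy rather than Docker or Kubernetes code, and the tiny echo protocol is made up for illustration.

```python
from __future__ import annotations

import socket
import threading


def recv_all(sock: socket.socket) -> bytes:
    """Read until the peer closes its sending side."""
    chunks = []
    while True:
        chunk = sock.recv(1024)
        if not chunk:
            return b"".join(chunks)
        chunks.append(chunk)


def start_server() -> tuple[socket.socket, int]:
    # "Container A": listens on loopback only, so nothing is exposed outside the namespace.
    srv = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    srv.bind(("127.0.0.1", 0))  # port 0 lets the OS choose a free port
    srv.listen(1)
    return srv, srv.getsockname()[1]


def serve_once(srv: socket.socket) -> None:
    conn, _ = srv.accept()
    with conn:
        conn.sendall(b"echo:" + recv_all(conn))


def client(port: int, message: bytes) -> bytes:
    # "Container B": reaches container A via 127.0.0.1 because they share a namespace.
    with socket.create_connection(("127.0.0.1", port), timeout=5) as sock:
        sock.sendall(message)
        sock.shutdown(socket.SHUT_WR)  # signal the end of the request
        return recv_all(sock)


if __name__ == "__main__":
    server, port = start_server()
    worker = threading.Thread(target=serve_once, args=(server,))
    worker.start()
    print(client(port, b"hello"))  # b'echo:hello'
    worker.join()
    server.close()
```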

- Pod-to-pod communication

Services abstract the pods away from individual compute units, so multiple pods can be treated much like the VMs or physical hosts of a cluster. A pod behind one service can communicate with a pod behind any other service using that service's virtual IP address. This is IP-based communication at layer 3. A pod's IP address is the same whether seen from inside (the container side) or from outside (the service side), which effectively gives a flat IP address space in which no address translation (NAT) is required.

The Kubernetes API server is started with a portal net range, specified in CIDR format, and each service is assigned its virtual IP address from this range. These addresses have local significance on the host, so make sure the service IP range does not clash with the docker0 bridge address or any other local IP address.

- Service-to-pod (bidirectional) communication

A service exposes itself through a virtual IP and port combination, with the virtual IPs assigned from the portal net. Kube-proxy is a process that runs on every host machine in the cluster and provides simple network proxying and load-balancing capabilities.

- Whenever a client accesses the virtual IP of a service, kube-proxy intercepts the request.

- Kube-proxy programs iptables rules that trap access to the service's virtual IP address and redirect the request.

- Kube-proxy transparently proxies the client request to the pods grouped under that service.

- Kube-proxy selects the target pod from the service's pods using a round-robin or session-affinity algorithm.
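The backend selection described in this list can be modelled in a few lines. This is a simplified sketch, not kube-proxy's actual code: the class name, pod names and client IPs are hypothetical, and affinity here is keyed on the client IP with no expiry timeout.

```python
from __future__ import annotations

import itertools


class ServiceProxy:
    """Toy model of a proxy choosing a backend pod for a service's virtual IP."""

    def __init__(self, pods: list[str], session_affinity: bool = False) -> None:
        if not pods:
            raise ValueError("a service needs at least one backend pod")
        self.pods = list(pods)
        self.session_affinity = session_affinity
        self._rr = itertools.cycle(self.pods)  # round-robin iterator over backends
        self._sticky: dict[str, str] = {}  # client IP -> pod, used only with affinity

    def pick(self, client_ip: str) -> str:
        if self.session_affinity and client_ip in self._sticky:
            return self._sticky[client_ip]  # the same client keeps hitting the same pod
        pod = next(self._rr)
        if self.session_affinity:
            self._sticky[client_ip] = pod
        return pod


rr = ServiceProxy(["pod-a", "pod-b", "pod-c"])
print([rr.pick("10.1.0.5") for _ in range(4)])  # ['pod-a', 'pod-b', 'pod-c', 'pod-a']

sticky = ServiceProxy(["pod-a", "pod-b"], session_affinity=True)
print(sticky.pick("10.1.0.5"), sticky.pick("10.1.0.7"), sticky.pick("10.1.0.5"))  # pod-a pod-b pod-a
```

New clients under affinity still advance the shared round-robin cursor, so sticky clients are spread across pods rather than all landing on the first one.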

Together, services and kube-proxy act like a distributed, multi-tenant load balancer: each node load-balances traffic from clients on that node using iptables. The portal IPs are virtual and should never be used outside the cluster network.

- Communication with the external world

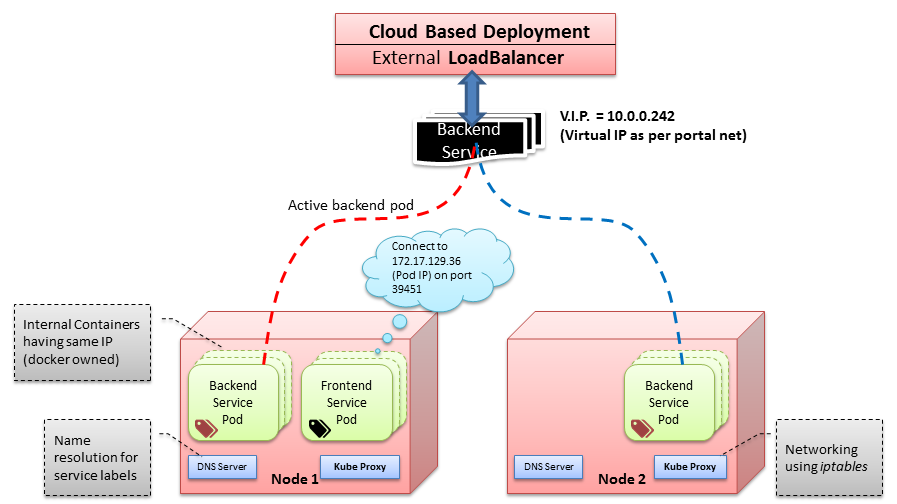

The figure above shows an example of Kubernetes networking architecture.

IP addresses assigned to services (from the portal net) are not routable from outside the cluster, because they are private (RFC 1918) addresses. To access a service from outside the cluster, an external load balancer must be used: the services exposed inside the cluster are mapped to the external load balancer, which may be part of the cloud provider’s network.
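Two constraints on the portal net appear in this article: it should be a private, non-routable range, and it must not clash with local addresses such as the docker0 bridge. Both are easy to check before starting the API server. The sketch below uses Python's standard ipaddress module; the function name and the example ranges (a 10.0.0.0/24 portal net and Docker's usual 172.17.0.0/16 bridge) are assumptions for illustration.

```python
from __future__ import annotations

import ipaddress


def check_portal_net(portal_net: str, local_cidrs: list[str]) -> list[str]:
    """Return the problems found with a proposed service (portal net) range."""
    net = ipaddress.ip_network(portal_net)
    problems = []
    # Service IPs should come from a private, non-routable range.
    if not net.is_private:
        problems.append(f"{net} is not a private range")
    # They must not clash with addresses already used on the host, e.g. docker0.
    for cidr in local_cidrs:
        local = ipaddress.ip_network(cidr, strict=False)  # accepts "172.17.0.1/16"
        if net.overlaps(local):
            problems.append(f"{net} overlaps local network {local}")
    return problems


docker0 = ["172.17.0.1/16"]  # Docker's usual default bridge address
print(check_portal_net("10.0.0.0/24", docker0))      # []
print(check_portal_net("172.17.128.0/24", docker0))  # ['172.17.128.0/24 overlaps local network 172.17.0.0/16']
```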

When client traffic reaches a node through the external load balancer, it is routed to the appropriate service, and kube-proxy redirects the request to an appropriate backend pod. This redirection is implemented with iptables rules, which can be listed from the NAT table:

```
sudo iptables -t nat -L
Chain KUBE-PORTALS-CONTAINER
REDIRECT  tcp  --  anywhere  10.0.0.242  tcp dpt:http-alt redir ports 39451

Chain KUBE-PORTALS-HOST
DNAT      tcp  --  anywhere  10.0.0.242  tcp dpt:http-alt to:172.17.129.36:39451
```
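The NAT listing can be read backwards into the commands that would produce rules of the same shape. The sketch below only builds command strings and never runs iptables; it assumes http-alt is TCP port 8080 (as in the standard services database) and treats the addresses from the listing as inputs. The function name is hypothetical, creating the chains and hooking them into the built-in chains is omitted, and this is not the code kube-proxy uses.

```python
from __future__ import annotations


def portal_rules(service_ip: str, service_port: int, proxy_ip: str, proxy_port: int) -> list[str]:
    """Build (but do not run) iptables commands shaped like the KUBE-PORTALS entries."""
    match = f"-d {service_ip}/32 -p tcp --dport {service_port}"
    return [
        # Traffic from containers: redirect the service IP to kube-proxy's local port.
        f"iptables -t nat -A KUBE-PORTALS-CONTAINER {match} -j REDIRECT --to-ports {proxy_port}",
        # Traffic originating on the host: DNAT to the address kube-proxy listens on.
        f"iptables -t nat -A KUBE-PORTALS-HOST {match} "
        f"-j DNAT --to-destination {proxy_ip}:{proxy_port}",
    ]


# Values taken from the listing; "http-alt" is TCP port 8080.
for rule in portal_rules("10.0.0.242", 8080, "172.17.129.36", 39451):
    print(rule)
```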

With some external load balancers, such as Google’s forwarding rules, a request may be re-routed to another pod within the same service; this is called a “request double bounce”.

If the pods are assigned labels, the request coming to kube-proxy is resolved using the DNS pod.

In short, Kubernetes provides cluster orchestration while leaving the networking options open. You should adhere to the following:

- All pods in a cluster should be part of the same broadcast domain.

- For external access, the load balancer should translate the request to the appropriate service.

